Your AI coding assistant can write impressive code. But it can’t read your company’s database schema, your internal documentation, or your production logs. It doesn’t know your team’s conventions, your deployment workflows, or why that weird workaround exists in the payment service.

This is the context gap. And it’s why AI tools feel powerful in demos but limited in real work.

The Model Context Protocol (MCP) is changing that. Not with better models or smarter prompts, but by standardizing how AI connects to the actual systems where your work lives.

Here’s what you need to know, what you can do today, and why this matters more than most AI announcements.

The problem MCP solves

AI assistants live in a bubble. They see what you show them: the current file, maybe the conversation history, perhaps a few documentation snippets you paste in.

What they don’t see:

- Your database tables and relationships

- Your API schemas and internal services

- Your Git history and commit patterns

- Your company’s documentation and decision records

- Your production metrics and error logs

- Your team’s code conventions and architectural patterns

Every time you switch contexts, you’re starting over. The AI has to relearn. You spend time explaining things it should already know.

The traditional solution: Build custom integrations. Write a plugin that connects Claude to your database. Write another for ChatGPT. Another for Cursor. Maintain them all as things change.

This doesn’t scale. Three AI tools, five data sources, fifteen custom integrations. Then a new AI tool launches and you start over.

MCP solves this by standardizing the connection layer. Build once, use everywhere.
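The arithmetic behind that claim, as a quick sketch. The function names are illustrative, and the model assumes each AI tool needs one MCP client implementation and each data source needs one MCP server: point-to-point integrations grow with the product of tools and sources, while a shared protocol grows with their sum.

```python
def custom_integrations(ai_tools: int, data_sources: int) -> int:
    """Every AI tool needs its own connector to every data source."""
    return ai_tools * data_sources


def mcp_components(ai_tools: int, data_sources: int) -> int:
    """One MCP client per AI tool plus one MCP server per data source."""
    return ai_tools + data_sources


print(custom_integrations(3, 5))  # 15
print(mcp_components(3, 5))       # 8

# Cost of adding a fourth AI tool under each approach.
print(custom_integrations(4, 5) - custom_integrations(3, 5))  # 5
print(mcp_components(4, 5) - mcp_components(3, 5))            # 1
```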

What MCP actually does

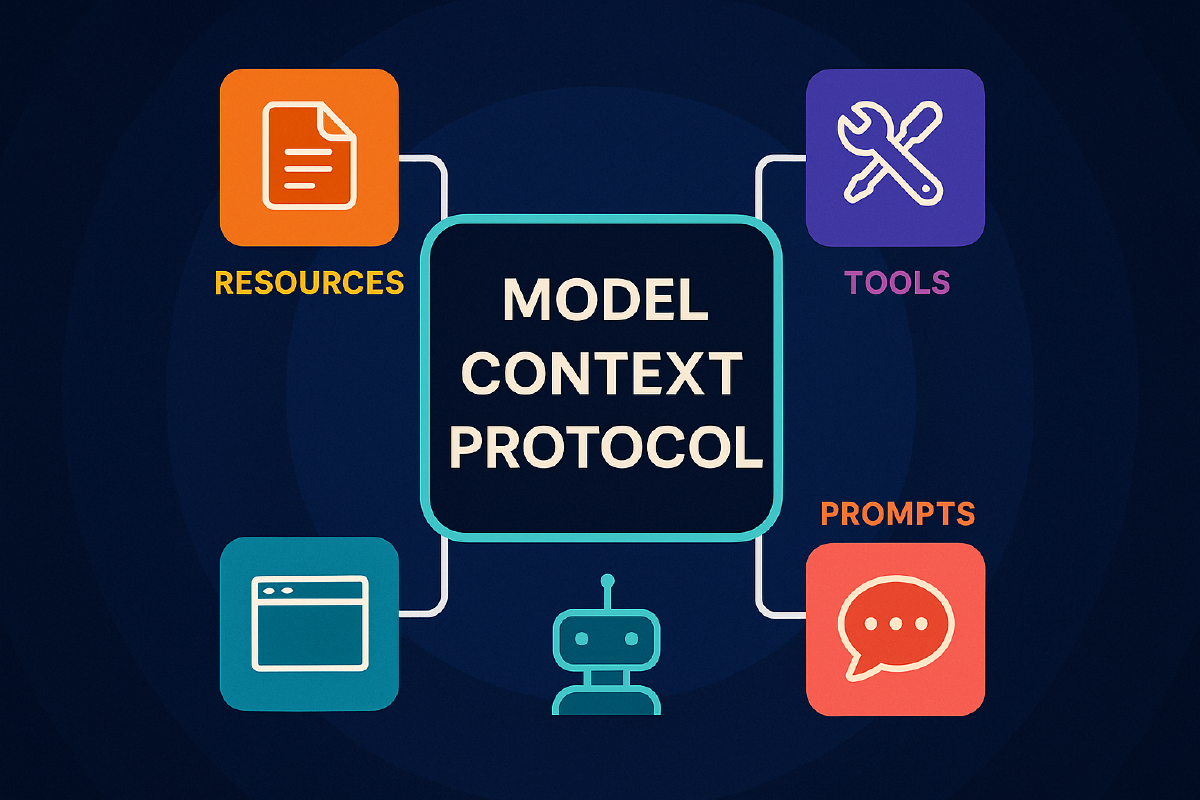

MCP is an open protocol that lets AI applications connect to three types of capabilities:

1. Resources (what AI can read)

Your databases, files, documentation, APIs. Anything that provides context the AI needs to understand your work.

Example: Your database exposes its schema as an MCP resource. Now Claude can see your table structure without you pasting it into the chat.

2. Tools (what AI can do)

Search operations, API calls, data queries, workflow triggers. Actions the AI can take on your behalf.

Example: A search tool lets the AI query your documentation. A database tool lets it run read-only queries. A Git tool lets it analyze commit history.

3. Prompts (how AI should think)

Templated workflows for specific tasks. Structured ways to guide AI behavior for your team’s common patterns.

Example: A code review prompt that includes your team’s specific conventions. An incident analysis prompt that knows your logging structure.
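On the wire, each of the three capability types maps to a small set of JSON-RPC methods. Here is a rough sketch of the discovery requests a client might send after connecting. The method names come from the MCP specification; the helper function and the request ids are illustrative.

```python
import json

# JSON-RPC method names the MCP specification defines for each capability type.
MCP_METHODS = {
    "resources": ["resources/list", "resources/read"],
    "tools": ["tools/list", "tools/call"],
    "prompts": ["prompts/list", "prompts/get"],
}


def discovery_requests(start_id: int = 1) -> list[dict]:
    """Build one 'list' request per capability type."""
    return [
        {"jsonrpc": "2.0", "id": start_id + offset, "method": methods[0]}
        for offset, methods in enumerate(MCP_METHODS.values())
    ]


for request in discovery_requests():
    print(json.dumps(request))
```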

Understanding the architecture (if you’ve built APIs, you’ll get this)

If you’ve worked with REST APIs, MCP will feel familiar. It’s the same pattern applied to AI integrations.

REST API thinking:

- Server exposes endpoints (GET /users, POST /orders)

- Client makes requests to those endpoints

- Standard protocol (HTTP) means any client can talk to any server

- Authentication and authorization control access

MCP thinking:

- Server exposes resources, tools, and prompts

- Client (AI application) discovers and uses those capabilities

- Standard protocol (JSON-RPC) means any MCP client can talk to any MCP server

- Host (container for the AI) enforces permissions and approval

The three-layer architecture

1. Server (your systems)

The MCP server wraps your existing systems and exposes them through a standard interface. This is like building a REST API for your database, except instead of HTTP endpoints, you’re exposing MCP resources and tools.

Example: Your PostgreSQL database gets an MCP server that exposes:

- Resources: schema definitions, table structures

- Tools: query execution (read-only to start)

- Prompts: common analysis patterns your team uses
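To make that concrete, here is a sketch of what such a server could advertise. The field names (`uri`, `mimeType`, `inputSchema`, `arguments`) follow the MCP specification. The URI, tool name, and prompt name are made up for illustration and are not taken from any real PostgreSQL server.

```python
import json

# Hypothetical entries a PostgreSQL MCP server could return from its list methods.
RESOURCES = [
    {
        "uri": "postgres://dev-db/public/users/schema",  # made-up URI
        "name": "users table schema",
        "mimeType": "application/json",
    }
]

TOOLS = [
    {
        "name": "run_readonly_query",  # hypothetical tool name
        "description": "Run a single SELECT statement against the dev database.",
        "inputSchema": {
            "type": "object",
            "properties": {"sql": {"type": "string"}},
            "required": ["sql"],
        },
    }
]

PROMPTS = [
    {
        "name": "weekly_signup_analysis",  # hypothetical prompt name
        "description": "Summarize signups and onboarding completion for a week.",
        "arguments": [{"name": "week_start", "required": True}],
    }
]


def list_result(kind: str) -> dict:
    """Wrap entries in the result shape of resources/list, tools/list, or prompts/list."""
    catalog = {"resources": RESOURCES, "tools": TOOLS, "prompts": PROMPTS}
    return {kind: catalog[kind]}


print(json.dumps(list_result("tools"), indent=2))
```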

2. Client (the connection layer)

The MCP client sits between the AI and the servers. It discovers what’s available, routes requests, and handles responses. Think of it like an API gateway, but for AI integrations.

The client handles:

- Connection management for its server (the host runs one client per server)

- Capability negotiation (what does this server support?)

- Message routing and response handling

- Security boundaries enforcement
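Capability negotiation happens in the first exchange. The client sends an `initialize` request and the server replies with what it supports. The sketch below builds that request and reads a made-up reply. The message shape follows the MCP specification, while the client name, server name, and sample reply are placeholders.

```python
import json

PROTOCOL_VERSION = "2025-06-18"  # one published spec revision; use what your SDK supports


def initialize_request(request_id: int) -> dict:
    """First message a client sends: announce version, capabilities, and identity."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "initialize",
        "params": {
            "protocolVersion": PROTOCOL_VERSION,
            "capabilities": {},
            "clientInfo": {"name": "example-client", "version": "0.1.0"},
        },
    }


def supported_features(initialize_result: dict) -> list[str]:
    """Return the capability groups the server declared in its initialize result."""
    return sorted(initialize_result.get("capabilities", {}).keys())


# A made-up server reply used only to exercise the helper.
sample_result = {
    "protocolVersion": PROTOCOL_VERSION,
    "capabilities": {"resources": {}, "tools": {"listChanged": True}},
    "serverInfo": {"name": "example-postgres-server", "version": "0.1.0"},
}

print(json.dumps(initialize_request(1)))
print(supported_features(sample_result))  # ['resources', 'tools']
```

A server that declares no `prompts` capability, like the sample above, should not be sent `prompts/list`.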

3. Host (the orchestrator)

The host is the container that manages everything. It controls which servers the AI can access, enforces approval flows for sensitive operations, and mediates access to the AI model itself.

This is the security and policy layer. Even if a server offers dangerous tools, the host can require explicit user approval before the AI can invoke them.
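A host-side approval gate can be very small. This sketch assumes a hypothetical allowlist policy and stand-in callbacks for the approval dialog and the tool call. Real hosts implement this in their own ways, so treat the names here as illustration only.

```python
from typing import Callable

# Hypothetical host policy: tools listed here may run without asking the user.
AUTO_APPROVED_TOOLS = {"search_docs", "read_schema"}


def invoke_with_approval(
    tool_name: str,
    arguments: dict,
    call_tool: Callable[[str, dict], str],
    ask_user: Callable[[str, dict], bool],
) -> str:
    """Forward a tool call only if policy allows it or the user approves it."""
    if tool_name not in AUTO_APPROVED_TOOLS and not ask_user(tool_name, arguments):
        return f"denied: user did not approve {tool_name}"
    return call_tool(tool_name, arguments)


def fake_call_tool(name: str, arguments: dict) -> str:
    """Stand-in for the client forwarding the call to a server."""
    return f"ran {name}"


def deny_all(name: str, arguments: dict) -> bool:
    """Stand-in for an approval dialog where the user clicks 'No'."""
    return False


print(invoke_with_approval("search_docs", {"q": "refunds"}, fake_call_tool, deny_all))
print(invoke_with_approval("delete_rows", {"table": "users"}, fake_call_tool, deny_all))
```

The first call runs because the tool is on the allowlist. The second is blocked because the user declined.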

How it compares to other integration patterns

Like REST APIs:

- Standard protocol that anyone can implement

- Server/client architecture with clear separation

- Discoverability (list available endpoints/resources)

- Stateless individual operations, stateful sessions

Like GraphQL:

- Clients can discover the schema (what’s available)

- Type-safe interactions with JSON Schema validation

- Flexible queries for exactly what you need

Like OAuth:

- Explicit permission and consent flows

- Scoped access to resources

- User remains in control of what AI can access

Unlike traditional APIs:

- Bidirectional communication (servers can request things from clients)

- Built-in support for streaming responses

- Designed specifically for AI-to-system integration

- Security model assumes untrusted AI behavior

The transport layer (how data moves)

MCP uses two primary transports:

stdio (standard input/output): For local processes. The MCP server runs on your machine, communicates through stdin/stdout. Simplest and most secure for desktop applications. This is how Claude Desktop connects to local servers.

Streamable HTTP: For remote servers. JSON-RPC over HTTP with server-sent events for streaming. Use this when you need team-wide access to a server or want to deploy servers in the cloud.

Why this matters: Start with stdio (local, simple, secure). Move to HTTP when you need remote access or horizontal scaling.
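On stdio, framing is about as simple as it gets: each JSON-RPC message is one line of JSON followed by a newline. A minimal sketch of that framing, using an in-memory buffer in place of a real process pipe (the helper names are illustrative):

```python
import io
import json
from typing import Iterator, TextIO


def write_message(stream: TextIO, message: dict) -> None:
    """Serialize one JSON-RPC message on a single line, terminated by a newline."""
    # json.dumps escapes newlines inside strings, so the output stays on one line.
    stream.write(json.dumps(message, separators=(",", ":")) + "\n")
    stream.flush()


def read_messages(stream: TextIO) -> Iterator[dict]:
    """Yield one parsed message per non-empty line until the stream ends."""
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


# An in-memory buffer stands in for a server's stdin/stdout.
pipe = io.StringIO()
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "ping"})
write_message(pipe, {"jsonrpc": "2.0", "method": "notifications/initialized"})
pipe.seek(0)
received = list(read_messages(pipe))
print([m["method"] for m in received])  # ['ping', 'notifications/initialized']
```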

The protocol is simple (intentionally)

MCP uses JSON-RPC 2.0. If you’ve worked with JSON APIs, the message format will look familiar:

    {
      "jsonrpc": "2.0",
      "method": "resources/list",
      "id": 1
    }

The simplicity is deliberate. Easy to implement, easy to debug, easy to extend.
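Debugging mostly comes down to matching replies to requests by `id` and checking for an `error` member. A small sketch of that check follows. The sample reply and the resource it lists are invented for the example.

```python
import json

request = {"jsonrpc": "2.0", "method": "resources/list", "id": 1}

# A made-up successful reply; the resource entry is illustrative.
response_text = json.dumps({
    "jsonrpc": "2.0",
    "id": 1,
    "result": {"resources": [{"uri": "file:///project/README.md", "name": "README"}]},
})


def result_for(request: dict, raw_response: str) -> dict:
    """Return the result payload, checking the id and surfacing JSON-RPC errors."""
    response = json.loads(raw_response)
    if response.get("id") != request["id"]:
        raise ValueError("response id does not match request id")
    if "error" in response:
        error = response["error"]
        raise RuntimeError(f"JSON-RPC error {error['code']}: {error['message']}")
    return response["result"]


print(result_for(request, response_text)["resources"][0]["name"])  # README
```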

Why this architecture works

Separation of concerns: Servers don’t need to know about AI models. AI applications don’t need to know about your database internals. The protocol is the contract between them.

Composability: One AI application can connect to multiple servers. One server can serve multiple clients. Mix and match based on needs.

Security boundaries: Servers are isolated from each other. The host enforces what the AI can access. Sensitive operations require explicit approval.

Ecosystem effects: When everyone builds to the same protocol, servers become reusable assets. Your PostgreSQL MCP server works with Claude, ChatGPT, and Gemini. Build once, benefit everywhere.

How to start using MCP today

This is the important part. You don’t need to build MCP servers to benefit from MCP. Start by using what exists.

Step 1: Install an MCP-compatible client

Claude Desktop is the easiest starting point. Download it, and you already have an MCP client ready to go.

Cursor supports MCP natively through its own MCP settings. If you’re using Cursor for coding, this path makes sense.

Other options: Zed, Windsurf, and Sourcegraph Cody all support MCP. Pick the tool you already use.

Step 2: Add your first MCP server

Start simple. The filesystem server lets Claude read your local files.

What this gives you: Instead of copying and pasting code into Claude, you can say “read the authentication module and suggest improvements.” Claude accesses the file directly, sees the full context, and gives better answers.

Five-minute setup:

- Install the filesystem MCP server

- Configure Claude Desktop to use it

- Point it at your project directory

- Now Claude can read your actual codebase
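For reference, here is roughly what the configuration looks like, generated from Python so you can adapt the path. It assumes Node.js is installed (the reference filesystem server is launched through `npx`) and that your Claude Desktop version reads an `mcpServers` entry from `claude_desktop_config.json`. The project path is a placeholder, so check the current docs before relying on it.

```python
import json

PROJECT_DIR = "/path/to/your/project"  # placeholder: the directory Claude may read

config = {
    "mcpServers": {
        "filesystem": {
            "command": "npx",
            "args": ["-y", "@modelcontextprotocol/server-filesystem", PROJECT_DIR],
        }
    }
}

# Print JSON to paste into claude_desktop_config.json, then restart Claude Desktop.
print(json.dumps(config, indent=2))
```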

Step 3: Connect to your databases

The PostgreSQL MCP server (and similar servers for other databases) exposes your schema and enables read-only queries.

What this changes: You can ask “show me all users who signed up in the last week but haven’t completed onboarding” and Claude queries your database directly. No copy-paste, no context switching.

The right way to do this: Start with read-only access. Use environment variables for credentials. Test on development databases first.
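“Read-only” is strongest when the database enforces it, not when your code merely promises it. The sketch below uses SQLite from the Python standard library as a stand-in for PostgreSQL, so it runs anywhere. With Postgres you would typically connect as a role that only has SELECT privileges. The table and data are invented for the demo.

```python
import sqlite3


def make_demo_connection() -> sqlite3.Connection:
    """Create an in-memory database with sample rows, then switch it to read-only."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER, onboarded INTEGER)")
    conn.executemany("INSERT INTO users VALUES (?, ?)", [(1, 1), (2, 0)])
    conn.commit()
    conn.execute("PRAGMA query_only = ON")  # from here on, SQLite rejects writes
    return conn


def run_query(conn: sqlite3.Connection, sql: str, params: tuple = ()) -> list:
    """Execute a statement with bound parameters and return all rows."""
    return conn.execute(sql, params).fetchall()


conn = make_demo_connection()
print(run_query(conn, "SELECT id FROM users WHERE onboarded = ?", (0,)))  # [(2,)]

try:
    run_query(conn, "DELETE FROM users")
except sqlite3.Error as exc:
    print("write rejected:", exc)
```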

Step 4: Add Git context

The Git MCP server exposes repository history, branches, and diffs.

What becomes possible: “Analyze the last ten commits to the payment service and summarize what changed.” Claude reads the actual Git log and gives you a coherent summary.
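Under the hood, a tool like this boils down to running `git log` and handing structured output to the model. This is a sketch of the idea, not how the Git MCP server is actually implemented. The function names and the sample output are illustrative.

```python
import subprocess

LOG_FORMAT = "%h%x09%an%x09%s"  # short hash, author, subject, separated by tabs


def parse_log(output: str) -> list[dict]:
    """Turn tab-separated `git log` lines into dictionaries."""
    commits = []
    for line in output.splitlines():
        if not line.strip():
            continue
        short_hash, author, subject = line.split("\t", 2)
        commits.append({"hash": short_hash, "author": author, "subject": subject})
    return commits


def recent_commits(repo_path: str, path_filter: str, limit: int = 10) -> list[dict]:
    """Run `git log` for one path inside a repository and parse the result."""
    result = subprocess.run(
        ["git", "-C", repo_path, "log", f"--max-count={limit}",
         f"--pretty=format:{LOG_FORMAT}", "--", path_filter],
        capture_output=True, text=True, check=True,
    )
    return parse_log(result.stdout)


# Invented sample output, so the parser can be tried without a repository.
sample = "a1b2c3d\tDana\tFix rounding in refunds\n9f8e7d6\tLee\tAdd retry to charge call"
for commit in parse_log(sample):
    print(commit["hash"], commit["subject"])
```

With a real checkout, `recent_commits(".", "services/payment")` would return the same structure for the last ten commits touching that (hypothetical) directory.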

Step 5: Connect to your tools

Existing MCP servers cover Google Drive, Slack, GitHub, Postgres, and more. The MCP Registry (in preview) is where you find community servers.

Pick what matters to your workflow. Documentation? Customer data? Production metrics? Connect the systems where your context lives.

What changes when AI has real context

This isn’t just convenience. It’s a fundamental shift in how you work with AI.

From manual context to automatic context

Before: You spend five minutes explaining your database structure, pasting schema definitions, copying relevant code into the chat.

After: Claude already sees your schema. You skip straight to the actual question.

The compounding effect: Across dozens of interactions per day, those saved minutes can add up to hours of context-gathering work.

From shallow answers to deep understanding

Before: AI suggests generic solutions because it doesn’t know your actual constraints and patterns.

After: AI sees how your team actually structures code, what conventions you follow, what trade-offs you’ve made. Suggestions are specific to your reality.

The quality shift: Fewer “that won’t work here” moments. More “that actually fits our architecture.”

From single-turn to multi-step workflows

Before: Every task is a new conversation. AI has no memory of what you’re working on or why.

After: AI can follow multi-step workflows that span files, systems, and contexts. It keeps the goal in view and carries it forward from one tool call to the next.

Example: “Analyze the performance metrics for the API, identify the slow endpoints, check the database queries for those endpoints, and suggest optimizations based on our actual schema.”

That’s four different context sources (metrics, API code, database, schema) orchestrated into one coherent workflow.

When to start building your own MCP servers

Once you’ve used MCP and see the value, you’ll spot the gaps. Systems specific to your company. Internal tools that don’t have public MCP servers. Workflows unique to your team.

That’s when you build.

The right first server to build

Your internal documentation. If you have Confluence, Notion, or internal wikis, an MCP server that exposes them as resources solves an immediate problem.

What it enables: Developers can ask AI questions about your internal systems and get answers sourced from your actual docs. No more hunting through wiki pages.

Technical complexity: Low. Resources are read-only, security is straightforward, and the value is immediate.

The second server: your APIs

Expose your internal API schemas and enable AI to understand how services connect.

What becomes possible: “Show me how to call the user service to update preferences” gets a response based on your actual API, not generic examples.

The integration pattern: Start with read-only schema exposure. Add safe test operations. Never expose production-write operations without explicit approval flows.

Building with the official SDKs

Official SDKs exist for TypeScript, Python, Java, Kotlin, C#, Go, PHP, Ruby, Rust, and Swift. Pick your stack and start.

The architecture is simple:

- Expose resources through `resources/list` and `resources/read`

- Declare tools through `tools/list` and handle calls through `tools/call`

- Define prompts that guide AI behavior for your specific use cases
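To show how little is involved, here is a dependency-free sketch of a dispatcher for those methods. It is deliberately not the official SDK. It skips initialization and transport, and the resource, tool, and helper names are made up. The SDKs handle all of this for you, so treat it as a picture of the message shapes only.

```python
import json

DOCS = {"docs://conventions": "Use snake_case for Python modules."}  # hypothetical resource

WORD_COUNT_TOOL = {
    "name": "word_count",  # hypothetical tool
    "description": "Count words in a text.",
    "inputSchema": {
        "type": "object",
        "properties": {"text": {"type": "string"}},
        "required": ["text"],
    },
}


def reply(message_id, result: dict) -> dict:
    return {"jsonrpc": "2.0", "id": message_id, "result": result}


def error(message_id, code: int, text: str) -> dict:
    return {"jsonrpc": "2.0", "id": message_id, "error": {"code": code, "message": text}}


def handle(message: dict) -> dict:
    """Route one JSON-RPC request to the matching MCP method handler."""
    method, params, mid = message["method"], message.get("params", {}), message["id"]
    if method == "resources/list":
        return reply(mid, {"resources": [{"uri": uri, "name": uri} for uri in DOCS]})
    if method == "resources/read":
        uri = params.get("uri")
        if uri not in DOCS:
            return error(mid, -32602, "Invalid params: unknown resource")
        return reply(mid, {"contents": [{"uri": uri, "mimeType": "text/plain", "text": DOCS[uri]}]})
    if method == "tools/list":
        return reply(mid, {"tools": [WORD_COUNT_TOOL]})
    if method == "tools/call":
        if params.get("name") != "word_count":
            return error(mid, -32602, "Invalid params: unknown tool")
        count = len(params.get("arguments", {}).get("text", "").split())
        return reply(mid, {"content": [{"type": "text", "text": str(count)}], "isError": False})
    return error(mid, -32601, f"Method not found: {method}")


call = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
        "params": {"name": "word_count", "arguments": {"text": "build once, use everywhere"}}}
print(json.dumps(handle(call)))
```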

Use the MCP Inspector to test your server. Connect to it, browse resources, invoke tools, see what the AI sees. Essential for debugging.

Security patterns that matter

1. Start local, go remote carefully

Local servers (stdio transport) are simpler and more secure. They run on the developer’s machine with their permissions.

Remote servers (HTTP transport) enable team-wide access but require proper authentication, authorization, and audit logging.

2. Read-only first, mutations later

Read-only resources carry far less risk than tools that modify data. Start with read-only exposure, and add write operations only once you have proper approval flows.

3. Never trust inputs

Validate everything. Use JSON Schema for tool parameters. Sanitize inputs. Assume the AI might be tricked into sending malicious requests.
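As a sketch of what that validation involves, here is a hand-rolled check covering only a small subset of JSON Schema (`required`, basic `type`, and rejection of unknown arguments, which is stricter than the JSON Schema default). In practice you would more likely use a full JSON Schema validator library. The schema and function name are illustrative.

```python
TYPE_MAP = {
    "string": str,
    "integer": int,
    "number": (int, float),
    "boolean": bool,
    "object": dict,
    "array": list,
}

QUERY_SCHEMA = {  # hypothetical inputSchema for a query tool
    "type": "object",
    "properties": {"sql": {"type": "string"}, "limit": {"type": "integer"}},
    "required": ["sql"],
}


def validate_arguments(schema: dict, arguments: dict) -> list[str]:
    """Return a list of problems; an empty list means the arguments passed."""
    errors = []
    properties = schema.get("properties", {})
    for name in schema.get("required", []):
        if name not in arguments:
            errors.append(f"missing required argument: {name}")
    for name, value in arguments.items():
        if name not in properties:
            errors.append(f"unexpected argument: {name}")
            continue
        expected = properties[name].get("type")
        python_type = TYPE_MAP.get(expected)
        if python_type is None:
            continue
        # bool is a subclass of int in Python, so reject it for numeric fields.
        bool_as_number = isinstance(value, bool) and expected in ("integer", "number")
        if not isinstance(value, python_type) or bool_as_number:
            errors.append(f"{name} should be of type {expected}")
    return errors


print(validate_arguments(QUERY_SCHEMA, {"sql": "SELECT 1", "limit": 10}))  # []
print(validate_arguments(QUERY_SCHEMA, {"limit": "ten", "drop": True}))
```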

4. Handle credentials properly

Environment variables for development. OS keychains for local desktop apps. Proper secret management for remote servers. Never in code, never in logs.
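A minimal sketch of the development-time pattern: read the secret from the environment, fail loudly if it is missing, and scrub it from anything you log. The variable name and helpers are hypothetical, and the demo sets a fake value only so the example runs on its own.

```python
import os


def load_secret(name: str) -> str:
    """Read a secret from the environment, refusing to continue without it."""
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(f"environment variable {name} is not set")
    return value


def redact(text: str, secrets: list[str]) -> str:
    """Replace every occurrence of each secret before the text is logged."""
    for secret in secrets:
        if secret:
            text = text.replace(secret, "[REDACTED]")
    return text


# Demo only: a real value would come from your shell or secret manager.
os.environ.setdefault("DEMO_DB_PASSWORD", "s3cr3t-demo")
password = load_secret("DEMO_DB_PASSWORD")
print(redact(f"connecting with password={password}", [password]))
```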

Why OpenAI and Google adopted this so fast

MCP launched in November 2024 from Anthropic. By March 2025, OpenAI adopted it. By April, Google announced support.

When competing AI companies agree on a standard in months, not years, pay attention.

The reason: Everyone faces the same integration problem. Claude needs to connect to databases. ChatGPT needs to connect to databases. Gemini needs to connect to databases.

The old approach: Build custom connectors for each AI tool and each data source. Multiplication of effort.

The MCP approach: Build one server that exposes your database through a standard protocol. Every MCP-compatible AI tool can use it immediately.

The ecosystem effect: As more tools adopt MCP, every MCP server you build becomes more valuable. As more servers exist, every AI tool that adopts MCP becomes more useful.

This is infrastructure-level network effects.

What this enables that wasn’t possible before

The real shift isn’t about making current work easier. It’s about making new patterns possible.

Contextual code review

AI that reviews code with full access to:

- Your architecture decision records

- Previous similar changes and their outcomes

- Production metrics for affected services

- Team conventions and style guides

This isn’t generic linting. It’s review that understands your actual system and suggests improvements based on what you’ve learned, not what’s theoretically best.

Predictive debugging

When an error occurs, AI with MCP access can:

- Read the error logs from your monitoring system

- Analyze the relevant code with full repository context

- Check similar past incidents and their resolutions

- Query the database state at the time of the error

- Suggest fixes based on your actual patterns

From hours to minutes. The context gathering that used to take most of the debugging time happens automatically.

Architectural coherence

AI that can enforce architectural patterns by:

- Seeing your actual service boundaries and dependencies

- Understanding the intent behind your design decisions

- Catching violations as they’re written, not in review

- Suggesting alternatives that fit your established patterns

This moves from reactive to proactive. Instead of fixing architectural drift, you prevent it.

Knowledge continuity

When a developer leaves or moves teams, their context doesn’t disappear if it’s encoded in MCP servers. The AI has the same access to systems, docs, and patterns.

Onboarding acceleration: New developers get answers sourced from actual systems, not just wikis that might be outdated.

For managers: the strategic opportunity

If you’re leading a team or organization, MCP represents more than a technical standard. It’s a forcing function for better infrastructure.

The immediate productivity play

Week 1: Install Claude Desktop for your team. Add filesystem and Git MCP servers. Developers can now ask AI about your actual codebase.

Weeks 2-4: Add database MCP servers (read-only, development instances). Connect to internal documentation.

Month 2: Measure time saved on context gathering, debugging, and code review.

The ROI is quick and measurable. Developers spend less time hunting for context and more time solving problems.

The platform investment

MCP forces you to think about your systems as APIs. What should be exposed? What’s the right level of abstraction? What are the security boundaries?

This work pays dividends beyond AI. Better-defined interfaces, clearer boundaries, improved documentation. You get organizational clarity whether or not MCP becomes the dominant standard.

The competitive positioning

AI adoption is uneven across teams. The constraint isn’t model quality, it’s integration with real work.

Teams with good MCP infrastructure can use AI effectively. Teams without it are stuck with generic, context-free interactions.

This creates meaningful differentiation in productivity, quality, and velocity.

The talent development angle

Engineers who understand how to build, secure, and scale MCP integrations are developing valuable skills.

This is infrastructure-level knowledge that transfers across companies. It’s not framework-specific or company-specific. It’s fundamental to how AI connects to systems.

Investing in team education here compounds. These skills become more valuable as the ecosystem matures.

The broader pattern: context is infrastructure

MCP is part of a larger shift. AI isn’t just about better models. It’s about better connections between models and the systems where work happens.

We’re moving from:

- Isolated AI interactions to connected workflows

- Generic suggestions to context-specific guidance

- Manual context gathering to automatic context access

- Single-turn conversations to multi-step orchestration

This is the infrastructure layer for AI-native development. Just like REST APIs became infrastructure for web services, MCP is becoming infrastructure for AI integration.

The companies and teams that recognize this early and build the right connective tissue will have a sustained advantage.

What comes next

Near-term (Q4 2025):

- Next MCP specification release (November 25, 2025)

- Wider IDE integration as standard feature

- Improved tooling for building and testing servers

- Enterprise adoption at scale

Medium-term (2026):

- MCP becomes expected, not optional

- Security and compliance frameworks mature

- Performance optimizations and caching patterns

- Vertical-specific server ecosystems emerge

Long-term trend: AI context shifts from “what you paste in the chat” to “what the AI has access to through proper integrations.”

The quality of AI assistance becomes proportional to the quality of your MCP infrastructure.

For developers: the career angle

What’s valuable right now:

- Understanding how to use existing MCP servers effectively

- Building servers for gaps in your team’s workflow

- Implementing security patterns correctly

- Designing integrations that scale

What becomes valuable:

- Deep expertise in MCP architecture and best practices

- Domain-specific integration knowledge (healthcare, finance, etc.)

- Platform-level thinking about how AI connects to systems

- Security and compliance for AI integrations

The skill combination that matters: Understanding both AI capabilities and production systems. How to give AI the right context without compromising security. How to design integrations that teams actually use.

This is infrastructure work. It’s less flashy than training models but more durable and more broadly applicable.

Start now, build as you go

If you’re a developer:

- Install Claude Desktop this week

- Add filesystem and Git servers to your workflow

- Notice where you still need to manually provide context

- Build MCP servers for those gaps

- Share what you build with your team

If you’re a manager:

- Set up MCP infrastructure for your team this month

- Measure time saved on context gathering

- Identify team-specific systems that need servers

- Invest in building those integrations

- Make MCP literacy part of onboarding

The best time to start was when MCP launched in November 2024. The second best time is today.

The teams that move now will have compound advantages as the ecosystem matures. Not because they predicted the future, but because they built the infrastructure that makes AI actually useful for real work.

Get started:

- MCP introduction and documentation

- Official servers repository with examples

- MCP Inspector for testing

- Claude Desktop download

The gap between AI demos and AI productivity is context. MCP is how you close it.