Alright, you read the intro guide. You connected an LLM to a vector database, stuffed it with your documents, and built your first Retrieval-Augmented Generation (RAG) app. It works. You ask a question, it spits out an answer backed by your data. High fives all around.

Then you show it to a real user.

They ask a question with a typo. The RAG returns garbage. They ask a question that requires info from two different documents. The RAG gets confused. They ask, “What are the key differences between product A and B?” and it just dumps the full spec sheet for product A.

Suddenly, your shiny AI marvel feels less like a genius and more like a clumsy intern.

Welcome to the real work of building RAG systems. The “hello world” version is easy. The production-grade version that doesn’t fall over when a user looks at it funny? That’s a different beast. Let’s dive into the upgrades that take your RAG from a fragile prototype to a robust powerhouse.

Upgrade 1: Your Retriever is a Dumb Metal Detector. Let’s Give It a Brain.

The single biggest failure point in a naive RAG is the ‘R’, the retrieval. Your first attempt probably just does a simple semantic search. That’s a decent start, but it’s like using a metal detector to find a specific coin in a junkyard. It finds stuff that’s generally similar, but often misses the mark.

Fix #1: Hybrid Search

Pure semantic search is great for understanding the meaning of a query, but it can be surprisingly bad with specific keywords, acronyms, or product codes. Your user types “Error Code: 4815162342” and the semantic search goes, “Hmm, you seem to be interested in numerical sequences and technical issues.” Not helpful.

Hybrid search is the answer. It combines the best of both worlds:

Keyword Search (like BM25): The old-school, reliable method that’s fantastic at finding exact matches for specific terms.

Semantic Search: The modern approach that’s great for understanding the intent and context behind a query.

By running both and intelligently merging the results, you get a system that can understand “tell me about our database connection pooling issues” and also pinpoint the exact log file mentioning DB-CONN-POOL-ERR-8675309.
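One common way to do the "intelligently merging" part is reciprocal rank fusion (RRF). The post doesn't prescribe a merge method, so treat this as one reasonable option rather than the only one. The sketch below assumes each retriever returns an ordered list of document IDs; the IDs and the constant `k=60` (a commonly used default) are illustrative.

```python
from collections import defaultdict
from typing import Dict, List


def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    """Merge several ranked lists of doc IDs into one list, best first.

    Each document earns 1 / (k + rank) from every list it appears in,
    so documents ranked well by both retrievers rise to the top.
    """
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Sort by score (descending); break ties by ID so output is deterministic.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical results from a BM25 index and a vector index.
keyword_hits = ["log_0412", "faq_pooling", "runbook_db"]
semantic_hits = ["faq_pooling", "design_doc", "log_0412"]

fused = reciprocal_rank_fusion([keyword_hits, semantic_hits])
print(fused)
```

RRF only looks at ranks, not raw scores, which sidesteps the problem that BM25 scores and cosine similarities live on different scales.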

Fix #2: Add a Reranker

Your retriever’s job is to be fast and cast a wide net. It should quickly fetch a bunch of potentially relevant documents (say, the top 25). But fast doesn’t always mean accurate.

A reranker is a second, more powerful (and slower) model that acts as a quality control inspector. It takes that initial list of 25 documents and scrutinizes each one against the original query. Its only job is to ask, “How truly relevant is this piece of text to this specific question?” It then re-orders the list, pushing the absolute best candidates to the top.

Think of it this way: retrieval is your broad Google search. Reranking is you actually clicking the top 5 links to see which one has the answer. It’s a crucial step for boosting precision.
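Here is a minimal sketch of the retrieve-then-rerank shape. The scoring function is injected, so in a real system `score_fn` would wrap a cross-encoder or a hosted rerank API. The `toy_overlap_score` below is only a stand-in so the example runs on its own; it is not a real relevance model.

```python
from typing import Callable, List, Tuple


def rerank(
    query: str,
    candidates: List[str],
    score_fn: Callable[[str, str], float],
    top_n: int = 5,
) -> List[Tuple[str, float]]:
    """Score every candidate against the query and keep the best top_n."""
    scored = [(doc, score_fn(query, doc)) for doc in candidates]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_n]


def toy_overlap_score(query: str, doc: str) -> float:
    """Placeholder scorer: fraction of query words that appear in the doc."""
    query_words = set(query.lower().split())
    doc_words = set(doc.lower().split())
    if not query_words:
        return 0.0
    return len(query_words & doc_words) / len(query_words)


candidates = [
    "reset your password in settings",
    "pricing for the pro plan",
    "how to reset a forgotten password",
]
top = rerank("how to reset password", candidates, toy_overlap_score, top_n=2)
print(top)
```

The point of the design is the split: the first stage stays cheap and broad, and only the short candidate list pays for the expensive scorer.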

Upgrade 2: Stop Blaming the User. Fix Their Queries.

Users don’t write perfect queries. They’re vague, they’re complex, or they’re just plain weird. “Garbage in, garbage out” applies here. Instead of just passing that garbage to your retriever, you can use an LLM to clean it up first. This is called Query Transformation.

Query Expansion: The user asks, “How to handle auth?” The LLM can expand this to “How to handle user authentication, including login, logout, and token management?” providing a richer query for the retriever.

Sub-Question Decomposition: The user asks a multi-part question like, “How does our pricing for the Pro plan compare to the Enterprise plan, and what are the overage fees?” A naive RAG will get lost. A smarter system uses an LLM to break this into three separate questions, retrieves answers for each, and then synthesizes a final response. This single technique can dramatically improve answers to complex queries.
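Sub-question decomposition can be sketched in a few lines. This assumes you have some `llm` callable that takes a prompt and returns text, and a `retrieve` callable that takes a query and returns documents; both are placeholders, as are the prompt wording and the `fake_llm` stub used to make the example runnable.

```python
from typing import Callable, Dict, List

DECOMPOSE_PROMPT = (
    "Break the question below into standalone sub-questions, one per line.\n"
    "Question: {question}"
)


def decompose(question: str, llm: Callable[[str], str]) -> List[str]:
    """Ask the LLM for sub-questions; fall back to the original question."""
    raw = llm(DECOMPOSE_PROMPT.format(question=question))
    # Strip bullet characters and whitespace the model may add around a line.
    subs = [line.strip(" -*\t") for line in raw.splitlines()]
    subs = [s for s in subs if s]
    return subs or [question]


def retrieve_per_subquestion(
    question: str,
    llm: Callable[[str], str],
    retrieve: Callable[[str], List[str]],
) -> Dict[str, List[str]]:
    """Run one retrieval per sub-question so each part gets its own context."""
    return {sub: retrieve(sub) for sub in decompose(question, llm)}


def fake_llm(prompt: str) -> str:
    """Stub standing in for a real model call."""
    return (
        "- What does the Pro plan cost?\n"
        "- What does the Enterprise plan cost?\n"
        "- What are the overage fees?"
    )


question = "How does Pro pricing compare to Enterprise, and what are the overage fees?"
print(decompose(question, fake_llm))
```

The per-sub-question results then go into a final synthesis prompt. The fallback to the original question matters: if the model returns nothing usable, you still run a normal retrieval instead of failing.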

Upgrade 3: From Simple Pipeline to Autonomous Agent

This is the big leap. A standard RAG is a fixed pipeline: Query → Retrieve → Augment → Generate. It’s a one-way street.

Agentic RAG throws that out the window. An agent is an LLM given a brain and a toolkit. Instead of blindly following a pipeline, it can reason, plan, and use tools to answer a question.

Here’s what that actually means:

Planning: The agent receives the query and creates a multi-step plan. For “Compare product A and B,” the plan might be: 1. Find docs about product A. 2. Find docs about product B. 3. Synthesize the findings and highlight differences.

Tool Use: Your agent isn’t limited to just one retriever. You can give it multiple tools. Maybe it has a vector_search_tool for your tech docs, a sql_database_tool for user data, and an api_call_tool for checking real-time stock prices. The agent chooses the right tool for the job based on the query.

Self-Correction: What if the first retrieval comes back with nothing useful? A naive RAG gives up. An agent can recognize the failure, think “Okay, that didn’t work,” and try something else, like rephrasing the query using one of the transformation techniques we just talked about and running the search again. It’s an iterative, self-healing process.
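The self-correction loop is the easiest of these three ideas to show in code. This is a deliberately small sketch, not a full agent framework: `search`, `rewrite`, and `is_good_enough` are hypothetical callables you would back with a retriever, an LLM query rewriter, and a relevance check.

```python
from typing import Callable, List, Tuple


def retrieve_with_retries(
    query: str,
    search: Callable[[str], List[str]],
    rewrite: Callable[[str, List[str]], str],
    is_good_enough: Callable[[List[str]], bool],
    max_attempts: int = 3,
) -> Tuple[List[str], List[str]]:
    """Search, check the results, and rephrase the query if they fall short.

    Returns (results, queries_tried). Results are empty if every attempt fails.
    """
    tried: List[str] = []
    current = query
    for _ in range(max_attempts):
        tried.append(current)
        results = search(current)
        if is_good_enough(results):
            return results, tried
        # Give the rewriter the original query plus what already failed.
        current = rewrite(query, tried)
    return [], tried


# Toy stand-ins so the loop can be exercised without any external service.
index = {"user authentication": ["auth_guide.md"]}
results, tried = retrieve_with_retries(
    "auth",
    search=lambda q: index.get(q, []),
    rewrite=lambda original, failed: "user authentication",
    is_good_enough=lambda docs: len(docs) > 0,
)
print(results, tried)
```

The `max_attempts` cap is the important design choice: an agent that can retry also needs a hard limit, or one bad query turns into an expensive loop.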

This is the difference between a simple script and a thinking application. It’s how you go from answering simple questions to tackling complex, multi-faceted research tasks.

Upgrade 4: Your Data is Probably Garbage. Fix That First.

Here’s an uncomfortable truth: most RAG failures aren’t caused by a lack of fancy retrieval algorithms. They’re caused by bad data preparation. You can have the world’s most sophisticated agentic RAG, but if you’re feeding it poorly chunked, inconsistent documents, it’ll still give you garbage answers.

Chunking Strategy Matters More Than You Think

Your first chunking attempt was probably “split on 500 characters with 50 character overlap.” That’s fine for a demo, but it’s terrible for production.

Semantic Chunking: Instead of arbitrary character limits, break documents at logical boundaries: paragraphs, sections, topics. Libraries like LangChain now support semantic chunking that uses embeddings to detect natural break points.

Context-Aware Chunking: Each chunk should be self-contained. A chunk that says “As mentioned above, the API key should be…” is useless without the context. Add document titles, section headers, and relevant metadata to each chunk.

Multi-Scale Chunking: Store chunks at different granularities. Maybe you have sentence-level chunks for precise retrieval, paragraph chunks for context, and document-level chunks for broad topics. Different queries need different levels of detail.
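To make the context-aware idea concrete, here is a small sketch that splits on paragraph boundaries and prefixes every chunk with its document title and section header. It assumes the source is Markdown-like text where headings start with `#` and sit in their own paragraph; the `Chunk` type and the `"Introduction"` default are made up for this example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Chunk:
    text: str      # what gets embedded: context header plus paragraph body
    title: str     # document title, also kept as metadata
    section: str   # nearest heading above the paragraph


def chunk_by_section(title: str, document: str) -> List[Chunk]:
    """Split on blank lines and carry the current heading into each chunk."""
    chunks: List[Chunk] = []
    section = "Introduction"  # used until the first heading appears
    for block in document.split("\n\n"):
        block = block.strip()
        if not block:
            continue
        if block.startswith("#"):
            # A heading updates the running context but is not a chunk itself.
            section = block.lstrip("#").strip()
            continue
        chunks.append(
            Chunk(text=f"{title} > {section}\n{block}", title=title, section=section)
        )
    return chunks


doc = (
    "# Setup\n\nInstall the CLI.\n\n"
    "# Auth\n\nThe API key should be stored in an env var.\n\n"
    "Rotate it every 90 days."
)
chunks = chunk_by_section("API Guide", doc)
for chunk in chunks:
    print(repr(chunk.text))
```

"Rotate it every 90 days." is meaningless on its own; with "API Guide > Auth" in front of it, both the embedding and the LLM have something to work with.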

Clean Your Data Like Your RAG Depends On It

Normalization: Convert everything to consistent formats: dates, phone numbers, product codes. Your search will thank you.

Metadata is Gold: Don’t just store text. Add document type, creation date, author, department, confidence level, last updated. This metadata becomes powerful filtering criteria during retrieval.

Content Cleaning: Remove headers, footers, navigation elements, and other noise that dilutes the signal. A chunk that’s 80% boilerplate and 20% actual content will hurt your embeddings.
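One cheap way to strip headers and footers is to drop any line that repeats across most pages. This is a heuristic sketch, not a complete cleaner: the 60% threshold and the three-page minimum are arbitrary assumptions you would tune on your own corpus.

```python
from collections import Counter
from typing import List


def strip_repeated_lines(pages: List[str], min_fraction: float = 0.6) -> List[str]:
    """Remove lines that appear on at least min_fraction of the pages."""
    if len(pages) < 3:
        # Too few pages to tell boilerplate from content; leave them alone.
        return list(pages)

    counts: Counter = Counter()
    for page in pages:
        # Count each distinct line once per page.
        counts.update({line.strip() for line in page.splitlines() if line.strip()})

    threshold = min_fraction * len(pages)
    boilerplate = {line for line, seen in counts.items() if seen >= threshold}

    cleaned = []
    for page in pages:
        kept = [
            line
            for line in page.splitlines()
            if line.strip() and line.strip() not in boilerplate
        ]
        cleaned.append("\n".join(kept))
    return cleaned


pages = [
    "ACME Corp Confidential\nReset your password from Settings.\nPage footer",
    "ACME Corp Confidential\nAPI keys live in the dashboard.\nPage footer",
    "ACME Corp Confidential\nContact support for refunds.\nPage footer",
]
cleaned = strip_repeated_lines(pages)
print(cleaned)
```

Run this before chunking, so the boilerplate never makes it into an embedding in the first place.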

Upgrade 5: Stop Flying Blind. Measure What Matters.

You’ve built an impressive RAG system, but how do you know if it’s actually good? “It feels better” isn’t enough when you’re in production.

Automated Evaluation is Non-Negotiable

Retrieval Evaluation: Track metrics like Mean Reciprocal Rank (MRR), Recall@K, and NDCG. Are you actually retrieving the right documents? Create a golden dataset of question-answer pairs and measure against it regularly.

Answer Quality: Use LLM-as-a-judge evaluation. A strong model such as GPT-5 can score your system’s answers against ground truth for relevance, accuracy, and completeness. It’s not perfect, but it’s scalable and, with a fixed rubric and prompt, reasonably consistent.

Human Feedback Loops: Build thumbs up/down buttons into your interface. Track which answers users found helpful. This real-world feedback is more valuable than any synthetic benchmark.
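The retrieval metrics are simple enough to compute yourself. Below is a sketch of MRR and Recall@K over a golden dataset, assuming each golden entry pairs a question with the set of document IDs that should be retrieved. The `retrieve` callable and the tiny dataset are placeholders.

```python
from typing import Callable, Dict, List, Set, Tuple


def reciprocal_rank(retrieved: List[str], relevant: Set[str]) -> float:
    """1 / rank of the first relevant document, or 0.0 if none was retrieved."""
    for rank, doc_id in enumerate(retrieved, start=1):
        if doc_id in relevant:
            return 1.0 / rank
    return 0.0


def recall_at_k(retrieved: List[str], relevant: Set[str], k: int) -> float:
    """Fraction of the relevant documents that show up in the top k."""
    if not relevant:
        return 0.0
    return len(set(retrieved[:k]) & relevant) / len(relevant)


def evaluate(
    golden: List[Tuple[str, Set[str]]],
    retrieve: Callable[[str], List[str]],
    k: int = 5,
) -> Dict[str, float]:
    """Average both metrics over every question in the golden set."""
    if not golden:
        return {"mrr": 0.0, "recall_at_k": 0.0}
    rr_total = 0.0
    recall_total = 0.0
    for question, relevant in golden:
        retrieved = retrieve(question)
        rr_total += reciprocal_rank(retrieved, relevant)
        recall_total += recall_at_k(retrieved, relevant, k)
    return {"mrr": rr_total / len(golden), "recall_at_k": recall_total / len(golden)}


# Hypothetical golden set and canned retriever output.
golden = [("q1", {"a"}), ("q2", {"b", "c"})]
canned = {"q1": ["x", "a", "y"], "q2": ["b", "z", "c"]}
metrics = evaluate(golden, lambda q: canned[q], k=2)
print(metrics)
```

Run this on every change to chunking, embeddings, or retrieval settings, and "it feels better" turns into a number you can compare.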

Production Monitoring

Retrieval Confidence Scores: Track when your system returns low-confidence results. If confidence drops below a threshold, surface that to users or trigger human review.

Query Pattern Analysis: What types of questions is your system struggling with? Are users asking about topics not covered in your knowledge base? This drives content strategy.

Hallucination Detection: Monitor when your system generates answers that don’t match the retrieved content. Some techniques include consistency checking and fact verification against the source material.

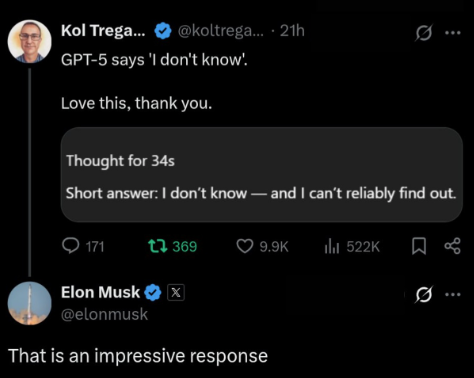

Upgrade 6: Know When to Say “I Don’t Know”

A RAG system that confidently gives wrong answers is worse than one that admits uncertainty. Teaching your system to be humble is a production necessity, not a nice-to-have.

Confidence Thresholding

Set minimum confidence thresholds for both retrieval and generation. If your retriever returns documents with low similarity scores, or if your LLM’s generation confidence is low, return an “I couldn’t find sufficient information” response instead of hallucinating.
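The retrieval half of that check fits in a few lines. This sketch assumes your retriever returns `(document, score)` pairs with higher scores meaning more relevant. The `0.35` cutoff is an arbitrary placeholder, since a sensible threshold depends on your embedding model and should come from your evaluation data. It does not cover generation confidence.

```python
from typing import Callable, List, Tuple

FALLBACK = "I couldn't find sufficient information to answer that."


def answer_or_abstain(
    question: str,
    hits: List[Tuple[str, float]],
    generate: Callable[[str, List[str]], str],
    min_score: float = 0.35,
    min_hits: int = 1,
) -> str:
    """Generate only when enough retrieved documents clear the score bar."""
    strong = [doc for doc, score in hits if score >= min_score]
    if len(strong) < min_hits:
        # Skip the LLM call entirely rather than let it improvise.
        return FALLBACK
    return generate(question, strong)


def fake_generate(question: str, docs: List[str]) -> str:
    """Stub standing in for the real LLM call."""
    return f"Answer built from {len(docs)} document(s)."


print(answer_or_abstain("What is Project Falcon?", [("eagle.md", 0.2)], fake_generate))
print(answer_or_abstain("What is Project Eagle?", [("eagle.md", 0.8)], fake_generate))
```

Note that weak documents are dropped even when the check passes, so the model only sees context that cleared the bar.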

Query Coverage Analysis

Build systems to detect when queries fall outside your knowledge base. If someone asks about “Project Falcon” but your documents only cover “Project Eagle,” detect that gap and respond appropriately rather than making something up about Falcon.

Graceful Degradation

Instead of “I don’t know,” provide helpful alternatives:

- “I couldn’t find specific information about X, but here’s related information about Y…”

- “Based on the documents available to me, I can only find partial information about…”

- “This question might require information not in my knowledge base. You might want to check…”

Upgrade 7: Speed vs. Quality: Welcome to Production Trade-offs

Your beautiful multi-stage RAG pipeline with reranking and query transformation? It might be taking 8 seconds per query. Users will not wait.

Latency Optimization Strategies

Caching: Cache embeddings, cache frequent queries, cache reranker results. Redis becomes your best friend.

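Parallel Processing: Run independent steps in parallel where possible. Keyword search doesn’t need the query embedding, so start it while the embedding is still being computed. Reranking depends on the retrieved candidates, so it can’t overlap with retrieval.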

Staged Retrieval: Use fast, rough retrieval for the first stage (getting 100 candidates), then expensive, precise reranking for the final stage (ranking top 10).

Pre-computation: For common queries or categories, pre-compute and cache results. Your “How do I reset my password?” answer doesn’t need to be generated fresh every time.
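Two of these ideas, caching and parallel retrieval, can be sketched with nothing but the standard library. The in-process `lru_cache` here is a stand-in for a shared cache like Redis, and `slow_embed` plus the two search lambdas are placeholders for your real embedding call and retrievers.

```python
from concurrent.futures import ThreadPoolExecutor
from functools import lru_cache
from typing import Callable, List, Tuple


def make_cached(fn: Callable, maxsize: int = 1024) -> Callable:
    """Wrap a function so repeated calls with the same argument are free."""
    return lru_cache(maxsize=maxsize)(fn)


def parallel_retrieve(
    query: str,
    keyword_search: Callable[[str], List[str]],
    semantic_search: Callable[[str], List[str]],
) -> Tuple[List[str], List[str]]:
    """Run both retrievers at once; total latency is roughly the slower one."""
    with ThreadPoolExecutor(max_workers=2) as pool:
        keyword_future = pool.submit(keyword_search, query)
        semantic_future = pool.submit(semantic_search, query)
        return keyword_future.result(), semantic_future.result()


calls: List[str] = []


def slow_embed(text: str) -> Tuple[float, ...]:
    """Placeholder for an embedding API call; records each real invocation."""
    calls.append(text)
    return (float(len(text)),)  # tuple, so the cached value cannot be mutated


embed = make_cached(slow_embed)
embed("reset password")
embed("reset password")  # served from the cache, slow_embed is not called again
print(len(calls))

both = parallel_retrieve("reset password", lambda q: ["kw_doc"], lambda q: ["vec_doc"])
print(both)
```

Threads suit this workload because retrieval calls mostly wait on network or disk I/O. For a multi-instance deployment you would swap the in-process cache for a shared one so every instance benefits.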

Scaling Considerations

Multi-Modal Indexing: Different document types might need different indexing strategies. PDFs, code, structured data: don’t force everything through the same pipeline.

Distributed Search: As your knowledge base grows, you’ll need distributed vector search. Plan for it early.

Load Balancing: Different queries have different computational costs. A simple FAQ lookup is cheap; a complex multi-document analysis is expensive. Route accordingly.

Upgrade 8: Not Everyone Should See Everything

In the enterprise world, document access control isn’t optional. Your RAG system needs to respect the same permissions as your file system.

User-Aware Retrieval

Filtered Search: Before retrieval, filter your knowledge base based on user permissions. Never retrieve documents the user shouldn’t see, even if they’re relevant.

Department-Based Access: Sales shouldn’t see engineering docs, finance shouldn’t see HR records. Implement role-based filtering at the retrieval level.

Dynamic Permissions: Permissions change. That project doc that was public last month might be confidential now. Keep your permission metadata synchronized with your source systems.
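The key property is that filtering happens before search, so restricted text never reaches the ranking step or the prompt. Here is an in-memory sketch of that ordering. In a real deployment the filter would usually be a metadata filter pushed into the vector database query; the `Doc` type, group names, and `search` callable are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet, List, Set


@dataclass(frozen=True)
class Doc:
    doc_id: str
    text: str
    allowed_groups: FrozenSet[str]  # synced from the source system's ACLs


def visible_docs(docs: List[Doc], user_groups: Set[str]) -> List[Doc]:
    """Keep only documents that share at least one group with the user."""
    return [doc for doc in docs if doc.allowed_groups & user_groups]


def secure_search(
    query: str,
    docs: List[Doc],
    user_groups: Set[str],
    search: Callable[[str, List[Doc]], List[Doc]],
) -> List[Doc]:
    """Filter first, then search, so hidden docs can never be ranked."""
    return search(query, visible_docs(docs, user_groups))


corpus = [
    Doc("pricing", "Pro plan pricing sheet", frozenset({"sales"})),
    Doc("payroll", "Q3 payroll records", frozenset({"hr", "finance"})),
    Doc("handbook", "Company handbook", frozenset({"sales", "hr", "engineering"})),
]


def match_all(query: str, docs: List[Doc]) -> List[Doc]:
    """Trivial stand-in for a real retriever: returns everything it is given."""
    return docs


sales_view = secure_search("plans", corpus, {"sales"}, match_all)
print([doc.doc_id for doc in sales_view])
```

Filtering after retrieval is weaker: it wastes top-K slots on documents the user can't see and leaves more room for a bug to leak them into the prompt.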

Security Considerations

Audit Trails: Log what users searched for and what documents were accessed. Compliance teams will thank you.

Data Residency: Know where your embeddings and cached data live. Some enterprises have strict requirements about data geography.

Prompt Injection Protection: Users will try to trick your system into revealing information they shouldn’t see. Implement safeguards against prompt injection attacks.

Upgrade 9: Presentation is Half the Battle

Having the right answer is only half the problem. Presenting it in a way that builds user trust and provides actionable information is the other half.

Citation and Source Attribution

Always Cite Sources: Every claim should link back to the source document, with page numbers or section references when possible.

Confidence Indicators: Show users how confident the system is. “Based on 3 highly relevant documents” vs “Based on 1 partially relevant document” sets very different expectations.

Source Metadata: Show document dates, authors, and types. A 5-year-old troubleshooting guide has different credibility than last week’s policy update.
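All three points come down to carrying chunk metadata through to the response. A small formatting sketch, assuming each retrieved source is a dict with `title`, `section`, and `updated` keys (hypothetical field names, as is the sample data):

```python
from typing import Dict, List


def format_answer(answer: str, sources: List[Dict[str, str]]) -> str:
    """Append numbered citations and a simple evidence summary to an answer."""
    lines = [answer, "", "Sources:"]
    for number, source in enumerate(sources, start=1):
        lines.append(
            f"[{number}] {source['title']} "
            f"({source['section']}, updated {source['updated']})"
        )
    noun = "document" if len(sources) == 1 else "documents"
    lines.append(f"Based on {len(sources)} retrieved {noun}.")
    return "\n".join(lines)


sources = [{"title": "Billing FAQ", "section": "Refunds", "updated": "2024-03-01"}]
rendered = format_answer("Refunds take 5 days.", sources)
print(rendered)
```

Showing the update date next to each citation lets users judge for themselves whether a source is too stale to trust.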

Answer Formatting

Structured Responses: Don’t just return paragraphs. Use bullet points, tables, step-by-step instructions when appropriate.

Progressive Disclosure: Start with a concise answer, then offer “Show more detail” options for users who want to dig deeper.

Multi-Modal Responses: If your knowledge base includes images, charts, or code snippets, surface them alongside text answers.

The Implementation Reality#

Let me be honest: these aren’t just academic improvements. They’re the difference between a system that works in your demo and one that works when your boss’s boss uses it.

If you want a quick win on the retrieval side, start with hybrid search and reranking. These are the highest-ROI retrieval improvements. Many vector databases and search engines now support hybrid search out of the box (Weaviate, Pinecone, Elasticsearch). For rerankers, Cohere has an excellent API, or you can use open-source models like ms-marco-MiniLM-L-12-v2.

But here’s the priority order for real production systems:

- Fix your data first (Upgrade 4). No amount of fancy retrieval will save you from bad chunking and dirty data.

- Add measurement and monitoring (Upgrade 5). You can’t improve what you can’t measure.

- Implement hybrid search and reranking (Upgrade 1), then query transformation (Upgrade 2). The first two are the highest-ROI retrieval improvements.

- Handle uncertainty gracefully (Upgrade 6). Better to say “I don’t know” than to hallucinate confidently.

- Optimize for production constraints (Upgrades 7-9). Speed, security, and presentation matter.

- Consider agentic architectures (Upgrade 3). Only when you’ve hit the limits of linear RAG.

The Bottom Line: Production RAG is Systems Engineering

Building a basic RAG is now table stakes. Building a RAG that survives contact with real users, enterprise security requirements, and production scale? That’s systems engineering.

The difference between a prototype and production isn’t just code; it’s data quality, monitoring, user experience, security, and operational concerns. The companies winning with RAG aren’t the ones with the fanciest algorithms; they’re the ones who’ve solved these unglamorous but critical problems.

Your users don’t care about your embedding model. They care about getting accurate, fast, trustworthy answers to their questions. Everything else is just implementation details.

The tools are evolving rapidly: LlamaIndex, LangChain, specialized vector databases, evaluation frameworks. But the fundamentals remain: clean data, good measurement, graceful failure handling, and respect for production constraints.

The future belongs to RAG systems that are both intelligent and reliable. Make yours one of them.