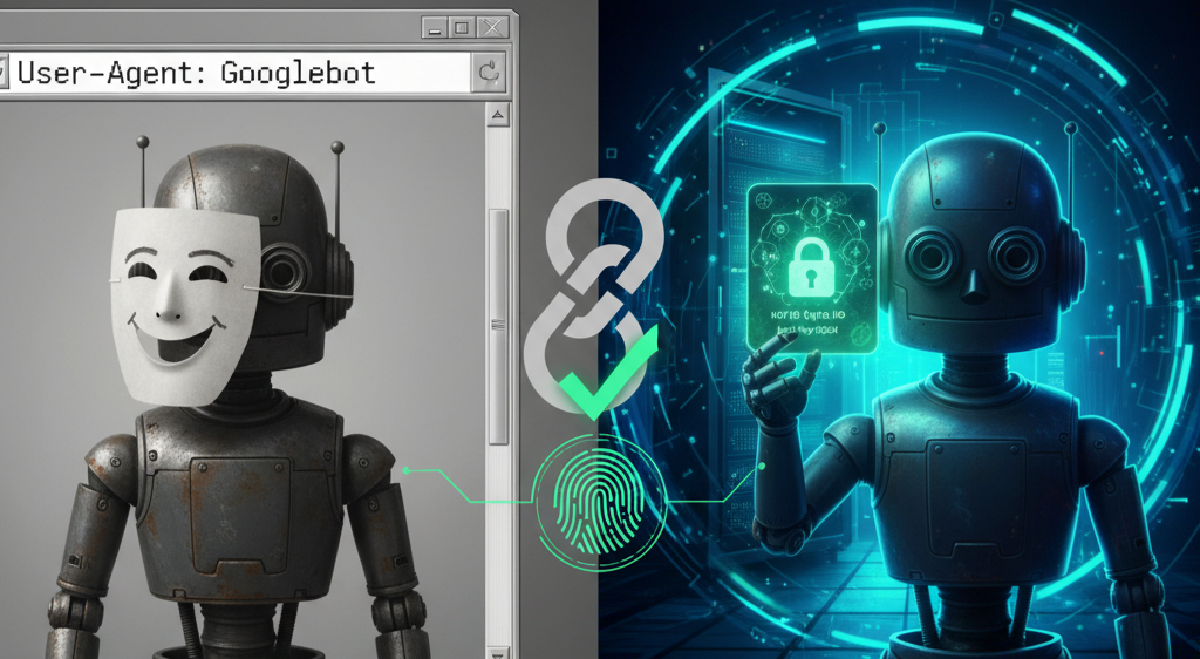

Every website deals with the same problem: bots crawling your site, and absolutely no reliable way to know which ones are legit. That bot claiming to be Googlebot? Could be Google’s actual search infrastructure. Could be a scraper wearing a Googlebot costume. Your only evidence is a User-Agent header that literally anyone can fake with one line of code.

Security reports show bot traffic now makes up over half of all web traffic, and a huge portion involves impersonation. Scrapers pretending to be search engines to bypass rate limits. Malicious actors spoofing legitimate crawlers to find vulnerabilities. And as AI agents become more common and start making purchases, booking services, and accessing sensitive data, the stakes are getting higher while our verification methods are stuck in 1999.

Web Bot Authentication (WBA) is the answer being developed by the IETF (the Internet Engineering Task Force, the organization that has produced voluntary Internet standards since 1986). Instead of trusting what bots claim to be, WBA makes them prove it with cryptographic signatures. Think of it as giving bots a digital ID card that can’t feasibly be forged without the bot’s private key.

If you’re building bots, managing infrastructure that deals with bot traffic, or just trying to figure out where web security is headed, WBA is worth understanding now.

The Problem With How We Verify Bots Today

Here’s what we’re working with right now, and why it’s broken.

User-Agent strings tell you nothing. Setting User-Agent: Googlebot takes literally one line in any HTTP library. It provides exactly zero security. Yet somehow, we’ve been relying on this for decades.

IP address verification breaks down in modern cloud infrastructure. Legitimate bots use shared hosting. IP ranges change constantly. And reverse DNS lookups? They’re slow, unreliable, and only as trustworthy as DNS itself (which is not very).

robots.txt is basically a suggestion. Good actors respect it. Bad actors ignore it completely. It has zero enforcement mechanism.

We’ve been treating bot verification like a trust exercise when it should be a cryptographic proof.

WBA fixes this. Bots sign their requests with private keys. Servers verify those signatures against published public keys. If the signature is valid, the request was signed by whoever holds the private key behind that published key, so the bot is who it claims to be. If it’s not, you know the claim is fake. It’s the same public-key principle that secures HTTPS, SSH, and signed git commits. It works, and it’s about time we applied it to bot traffic.
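To make the sign-then-verify idea concrete, here’s a minimal sketch in Python. It assumes the third-party cryptography package (any Ed25519 library works the same way) and signs a made-up string rather than a real request: the bot signs with its private key, the server checks with the public key, and changing the signed bytes or using a different key makes verification fail.

```python
from cryptography.exceptions import InvalidSignature
from cryptography.hazmat.primitives.asymmetric.ed25519 import (
    Ed25519PrivateKey,
    Ed25519PublicKey,
)


def is_valid(public_key: Ed25519PublicKey, signature: bytes, message: bytes) -> bool:
    """Return True if `signature` over `message` verifies under `public_key`."""
    try:
        public_key.verify(signature, message)  # raises InvalidSignature on mismatch
        return True
    except InvalidSignature:
        return False


# Bot side: the private key never leaves the bot's infrastructure.
private_key = Ed25519PrivateKey.generate()
message = b"GET example.com /page"
signature = private_key.sign(message)  # 64-byte Ed25519 signature

# Server side: only the published public key is needed.
public_key = private_key.public_key()
print(is_valid(public_key, signature, message))                    # True
print(is_valid(public_key, signature, b"GET example.com /admin"))  # False: different request
print(is_valid(Ed25519PrivateKey.generate().public_key(), signature, message))  # False: wrong key
```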

How WBA Actually Works

The architecture is surprisingly clean. It builds on existing IETF standards (HTTP Message Signatures, RFC 9421, for signing requests, and JSON Web Keys, RFC 7517, served from a well-known URI for publishing keys) instead of reinventing cryptography, which is always a good sign.

Here’s how it works in practice:

Bot Setup

The bot operator generates an Ed25519 key pair. You could use other algorithms, but Ed25519 is becoming the default for good reasons: small signatures, fast verification, battle-tested modern crypto.
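Generating the key pair is a one-liner in most crypto libraries. A sketch, again assuming Python’s cryptography package: it extracts the 32 raw public-key bytes that a JWK publishes (base64url-encoded, without padding) and serializes the private key as passphrase-encrypted PKCS#8 PEM for storage. The passphrase is a placeholder; where the key and passphrase actually live (a secrets manager, an HSM) is your call.

```python
import base64

from cryptography.hazmat.primitives import serialization
from cryptography.hazmat.primitives.asymmetric.ed25519 import Ed25519PrivateKey


def b64url(data: bytes) -> str:
    """Unpadded base64url, the encoding JWKs use for key material."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


private_key = Ed25519PrivateKey.generate()

# 32 raw public-key bytes: this is what ends up in the JWK "x" member.
public_raw = private_key.public_key().public_bytes(
    encoding=serialization.Encoding.Raw,
    format=serialization.PublicFormat.Raw,
)

# Private key as encrypted PKCS#8 PEM. "change-me" is a placeholder passphrase;
# load the real one from your secret store.
private_pem = private_key.private_bytes(
    encoding=serialization.Encoding.PEM,
    format=serialization.PrivateFormat.PKCS8,
    encryption_algorithm=serialization.BestAvailableEncryption(b"change-me"),
)

print(len(public_raw), b64url(public_raw))
```

Reloading it later is serialization.load_pem_private_key(private_pem, password=b"change-me").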

They publish the public key in a JSON Web Key Set (JWKS) at a well-known URL on their domain:

https://botdomain.com/.well-known/http-message-signatures-directory

This directory file itself gets cryptographically signed to prevent tampering. The bot keeps the private key secure and uses it to sign requests.
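Here’s roughly what the JWKS might look like for a single Ed25519 key, built with the Python standard library. Ed25519 keys use the JWK key type OKP (RFC 8037). Deriving kid from the RFC 7638 thumbprint is an assumption on our part (it’s one common convention); the request example below uses a human-readable keyid instead, so check the current draft for what verifiers expect. The sample public key is the one from RFC 8032’s first test vector, used only as input data, and the directory’s own signature isn’t shown.

```python
import base64
import hashlib
import json


def b64url(data: bytes) -> str:
    """Unpadded base64url encoding used by JWKs."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def ed25519_jwk(public_raw: bytes) -> dict:
    """Build an OKP/Ed25519 JWK from a raw 32-byte public key."""
    if len(public_raw) != 32:
        raise ValueError("Ed25519 public keys are 32 bytes")
    jwk = {"kty": "OKP", "crv": "Ed25519", "x": b64url(public_raw)}
    # RFC 7638 thumbprint: SHA-256 over the required members (crv, kty, x),
    # lexicographically ordered, with no whitespace.
    canonical = json.dumps(
        {k: jwk[k] for k in ("crv", "kty", "x")}, separators=(",", ":"), sort_keys=True
    )
    jwk["kid"] = b64url(hashlib.sha256(canonical.encode("utf-8")).digest())
    return jwk


# Sample input only: the public key from RFC 8032, section 7.1, TEST 1.
sample_key = bytes.fromhex(
    "d75a980182b10ab7d54bfed3c964073a0ee172f3daa62325af021a68f707511a"
)
directory = {"keys": [ed25519_jwk(sample_key)]}
print(json.dumps(directory, indent=2))
```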

Signing Requests

When the bot makes a request, it adds HTTP headers that contain the cryptographic signature. It looks like this:

```http
GET /page HTTP/1.1
Host: example.com
Signature-Input: sig1=("@authority" "@path" "@method");created=1700000000;keyid="bot-key-2024";alg="ed25519";tag="web-bot-auth"
Signature: sig1=:MEUCIQDexample...:
Signature-Agent: "https://botdomain.com/.well-known/http-message-signatures-directory"
```

The Signature-Input header specifies what’s being signed: the domain (@authority), path (@path), HTTP method (@method), and a creation timestamp (created). It also declares the algorithm (alg="ed25519") and tags this as a WBA signature. The timestamp limits replay attacks (someone capturing your signed request and reusing it later) to a short window, because servers reject signatures that are too old. You can optionally include a nonce parameter for additional replay protection in high-security scenarios.

The Signature header contains the actual cryptographic signature.

The Signature-Agent header points to where the public key lives (note the quotes around the URL, per the spec).
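Under the hood, HTTP Message Signatures (RFC 9421) don’t sign the raw request; they sign a “signature base” string built from the covered components plus the signature parameters. A sketch of that construction for the headers shown above, in Python with only the standard library. The function names are hypothetical, and the demo passes a SHA-256 digest as a stand-in signer purely so it runs without third-party packages. That stand-in is not a signature; in practice you’d pass an Ed25519 private key’s sign method.

```python
import base64
import hashlib
from typing import Callable, Dict, Optional

# Covered components, in the same order as the Signature-Input example.
COMPONENTS = ("@authority", "@path", "@method")


def signature_params(created: int, keyid: str, nonce: Optional[str] = None) -> str:
    """Serialize the component list and parameters (the value after "sig1=")."""
    params = "(" + " ".join(f'"{c}"' for c in COMPONENTS) + ")"
    params += f';created={created};keyid="{keyid}";alg="ed25519"'
    if nonce is not None:
        params += f';nonce="{nonce}"'  # optional extra replay protection
    return params + ';tag="web-bot-auth"'


def signature_base(method: str, authority: str, path: str, params: str) -> str:
    """RFC 9421 signature base: one line per covered component, then @signature-params."""
    values = {"@authority": authority.lower(), "@path": path, "@method": method}
    lines = [f'"{c}": {values[c]}' for c in COMPONENTS]
    lines.append(f'"@signature-params": {params}')
    return "\n".join(lines)  # joined with LF, no trailing newline


def sign_headers(method: str, authority: str, path: str, created: int, keyid: str,
                 agent_url: str, sign: Callable[[bytes], bytes],
                 nonce: Optional[str] = None) -> Dict[str, str]:
    """Return the three WBA headers for one request, signed with `sign`."""
    params = signature_params(created, keyid, nonce)
    base = signature_base(method, authority, path, params)
    signature = base64.b64encode(sign(base.encode("ascii"))).decode("ascii")
    return {
        "Signature-Input": f"sig1={params}",
        "Signature": f"sig1=:{signature}:",   # structured-field byte sequence
        "Signature-Agent": f'"{agent_url}"',  # quoted string, as in the example
    }


def placeholder_sign(data: bytes) -> bytes:
    """NOT a signature: a stand-in so the demo runs with the standard library only."""
    return hashlib.sha256(data).digest()


headers = sign_headers(
    "GET", "example.com", "/page", 1700000000, "bot-key-2024",
    "https://botdomain.com/.well-known/http-message-signatures-directory",
    sign=placeholder_sign,
)
for name, value in headers.items():
    print(f"{name}: {value}")
```

The verifier rebuilds the same base string from the request it received, so any byte-level difference in a covered component (a different path, a different host) breaks verification.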

Server Verification

Your server receives this request and verifies it:

- Extract the Signature-Agent URL from the headers
- Fetch the JWKS from that URL (cache this aggressively so you aren’t fetching it on every request)
- Find the public key matching the keyid
- Verify the signature against the request components
- Check that the timestamp isn’t too old

If everything checks out, you’ve got a verified bot. If anything fails, you treat it as untrusted.
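A sketch of the key lookup, signature check, and timestamp check in Python, assuming the cryptography package. To keep it short it assumes the headers have already been parsed (real Signature-Input parsing needs a structured-fields parser) and the signature base has been rebuilt from the incoming request; verify_request and its parameters are hypothetical names, and max_age=300 seconds is a placeholder, not a spec value.

```python
import base64
import time
from typing import Optional

from cryptography.exceptions import InvalidSignature
from cryptography.hazmat.primitives import serialization
from cryptography.hazmat.primitives.asymmetric.ed25519 import (
    Ed25519PrivateKey,
    Ed25519PublicKey,
)


def b64url_decode(s: str) -> bytes:
    """Decode unpadded base64url (the JWK encoding)."""
    return base64.urlsafe_b64decode(s + "=" * (-len(s) % 4))


def verify_request(jwks: dict, keyid: str, signature_b64: str, base: str,
                   created: int, max_age: int = 300,
                   now: Optional[float] = None) -> bool:
    """Check timestamp, find the key by keyid, and verify the Ed25519 signature.

    Assumes `jwks` was fetched (ideally from a cache) via the Signature-Agent URL,
    and `base` is the RFC 9421 signature base rebuilt from the received request.
    """
    now = time.time() if now is None else now
    if not 0 <= now - created <= max_age:  # in the future, or too old to trust
        return False
    jwk = next((k for k in jwks.get("keys", []) if k.get("kid") == keyid), None)
    if jwk is None or jwk.get("kty") != "OKP" or jwk.get("crv") != "Ed25519":
        return False                         # unknown keyid or unsupported key type
    try:
        public_key = Ed25519PublicKey.from_public_bytes(b64url_decode(jwk["x"]))
        public_key.verify(base64.b64decode(signature_b64), base.encode("ascii"))
    except (InvalidSignature, ValueError, KeyError):
        return False                         # bad signature or malformed input
    return True


# Demo: a throwaway key stands in for a real bot's published key.
bot_key = Ed25519PrivateKey.generate()
x = base64.urlsafe_b64encode(
    bot_key.public_key().public_bytes(serialization.Encoding.Raw,
                                      serialization.PublicFormat.Raw)
).rstrip(b"=").decode("ascii")
jwks = {"keys": [{"kty": "OKP", "crv": "Ed25519", "kid": "bot-key-2024", "x": x}]}
# Shortened signature base for the demo; a real one ends with "@signature-params".
base = '"@authority": example.com\n"@path": /page\n"@method": GET'
sig = base64.b64encode(bot_key.sign(base.encode("ascii"))).decode("ascii")

print(verify_request(jwks, "bot-key-2024", sig, base, created=1000, now=1010))  # True
print(verify_request(jwks, "bot-key-2024", sig, base, created=1000, now=5000))  # False: stale
```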

The elegant part: this works with zero pre-registration. Bot operators publish their keys. Server operators can verify any bot implementing the standard. No central certificate authority, no coordination required.

What This Means for Bot Management

This is where WBA gets interesting from a practical standpoint. It’s not just about verification. It’s about granular control.

With WBA, you can implement policies like:

“Allow all verified bots” for organizations that want maximum crawlability but minimum garbage traffic.

“Block all unverified bots” if you’re dealing with scraping problems and only want to allow bots that can prove their identity.

“Different rate limits for verified vs unverified” so legitimate crawlers get fast access while suspicious traffic gets throttled.

“Per-bot policies” where you allow specific verified bots to access specific endpoints. Maybe you let search engine bots crawl everything, but AI training bots only get access to public content, not user-generated data.
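These policies reduce to a small decision function once verification has run. A sketch in Python; the bot origins, path prefixes, and rate limits are all made-up placeholders, and verified_agent stands for whatever identity your verification step produced (None when the request wasn’t verified).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Decision:
    allow: bool
    requests_per_minute: int  # 0 when the request is blocked


# Hypothetical per-bot policy: verified bot origin -> path prefixes it may access.
ALLOWED_PREFIXES = {
    "https://search.example": ("/",),             # search crawler: everything
    "https://ai-trainer.example": ("/public/",),  # AI training bot: public content only
}


def decide(verified_agent: Optional[str], path: str,
           block_unverified: bool = False) -> Decision:
    """Map the verification result and requested path to an allow/rate-limit decision."""
    if verified_agent is None:
        # Unverified: block outright, or allow with a tight rate limit.
        return Decision(False, 0) if block_unverified else Decision(True, 10)
    prefixes = ALLOWED_PREFIXES.get(verified_agent)
    if prefixes is None:
        return Decision(True, 60)    # verified, but no bot-specific rule
    if any(path.startswith(p) for p in prefixes):
        return Decision(True, 600)   # verified and permitted: generous limit
    return Decision(False, 0)        # verified, but outside its allowed area


print(decide(None, "/products"))
print(decide("https://ai-trainer.example", "/users/42/posts"))
```

Keeping the decision separate from verification means the same verified identity can be treated differently per endpoint without touching the crypto code.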

This granular control is the real value proposition. It’s not just about security. It’s about creating a better experience for good bots (what the industry is calling “Agent Experience” or AX) while still defending against bad actors.

Search engines can crawl faster when they’re verified. AI agents gathering data for legitimate purposes get reliable access. Your infrastructure spends less time blocking and rate limiting bots that turn out to be legitimate. Everyone wins.

Real-World Adoption

WBA is still early, but it’s moving from IETF working group discussions to actual production deployments, and the momentum is picking up.

Cloudflare is leading the charge. They integrated WBA into their Verified Bots program, built a CLI tool (http-signature-directory) for validating bot directories, and published implementation guides. Bot operators can register through Cloudflare’s bot submission form by providing their key directory URL. If you’re using Cloudflare’s bot management today, you can configure rules that treat WBA-verified bots differently from the chaos of unverified traffic.

In October 2025, things got more interesting. Cloudflare partnered with Visa, Mastercard, and American Express to embed WBA into “agentic commerce” protocols. The idea: AI agents making purchases on your behalf need verifiable identities. Nobody wants an agent spending their money unless it can prove it’s actually their assistant. WBA provides the authentication layer for protocols like Trusted Agent Protocol and Agent Pay.

AWS added WBA support (in preview) to Amazon Bedrock AgentCore Browser. AI agents running through Bedrock can now use WBA to reduce CAPTCHA friction when crawling sites. AWS is collaborating with Cloudflare, Akamai, and HUMAN Security on implementation.

Cloudflare has also proposed an open registry format to decentralize bot discovery beyond their own infrastructure, which could help WBA adoption across the broader ecosystem.

The IETF webbotauth working group remains active, with multiple drafts in progress. No final RFCs yet, but the standard is evolving based on real-world deployment feedback.

But let’s be honest about where we are: most bots aren’t signing requests yet. Most servers aren’t verifying signatures. We’re in that awkward early adopter phase where the spec exists, some tools work, but you can’t count on it being everywhere. If you’re implementing WBA today, you’re betting on where the industry is headed, not following established patterns.

The trajectory looks promising, though. The incentives align for everyone involved, and the AI agent explosion is forcing the issue. When AI agents start making financial transactions and accessing sensitive services, verifiable identity becomes non-negotiable.

The Developer Experience

If you’re running bots, implementation is straightforward. Generate keys, create a JWKS file, host it at the well-known URL, add signature generation to your HTTP client. The crypto libraries do the heavy lifting.

The harder part is operations. You’re managing cryptographic keys for your infrastructure now. That means:

Key rotation: How often do you rotate? How do you support old and new keys during transitions? The spec gives you flexibility but not specific guidance.

Secure storage: Private keys need to be kept secure. If they leak, someone can impersonate your bot. If you lose them, you’ve lost your bot’s identity.

Failure handling: What happens when signing fails? How do you monitor and alert on verification failures?

These aren’t insurmountable problems, but they’re real operational concerns you need to think through.
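For key rotation, one reasonable scheme (our assumption, since the spec leaves it open) is an overlap window: publish the new key first, start signing with it only once verifiers’ cached directories have had time to pick it up, and keep the old key published until signatures made with it have aged out. A Python sketch; KeyRecord, cache_ttl, and max_sig_age are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class KeyRecord:
    kid: str
    published_at: float                 # when the key was added to the directory
    retired_at: Optional[float] = None  # when the bot stopped signing with it


def signing_key(keys: List[KeyRecord], now: float, cache_ttl: float) -> str:
    """Newest active key that has been published for at least one cache TTL."""
    ready = [k for k in keys
             if k.retired_at is None and now >= k.published_at + cache_ttl]
    if not ready:
        raise RuntimeError("no published key is old enough to sign with yet")
    return max(ready, key=lambda k: k.published_at).kid


def keys_to_publish(keys: List[KeyRecord], now: float, max_sig_age: float) -> List[str]:
    """Active keys, plus retired keys whose last signatures may still be accepted."""
    return [k.kid for k in keys
            if k.retired_at is None or now < k.retired_at + max_sig_age]


keys = [KeyRecord("key-2024", published_at=0.0),
        KeyRecord("key-2025", published_at=10_000.0)]
print(signing_key(keys, now=10_000.0, cache_ttl=3600))  # key-2024: new key too fresh
print(signing_key(keys, now=13_600.0, cache_ttl=3600))  # key-2025
keys[0].retired_at = 13_600.0                           # stop signing with the old key
print(keys_to_publish(keys, now=13_700.0, max_sig_age=300))  # ['key-2024', 'key-2025']
print(keys_to_publish(keys, now=14_000.0, max_sig_age=300))  # ['key-2025']
```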

If you’re verifying bots on your servers, the experience depends on your setup. Behind Cloudflare? It’s mostly configuration. Rolling your own? You’re implementing signature verification, JWKS caching, error handling, and policy decisions about what to do with verified vs unverified traffic. It’s not hard, but it’s the kind of thing where you spend an afternoon wrestling with openssl and edge cases.

The verification code itself isn’t complex. Crypto libraries handle the heavy lifting. But edge cases will eat your lunch. What do you do when the JWKS URL times out? When signatures are valid but the bot behaves suspiciously? When clocks are slightly out of sync and timestamps are off by just enough to fail validation?
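Clock skew is the easiest of these to handle: allow a small tolerance in both directions instead of a hard cutoff. A Python sketch; the 300-second max age and 30-second skew are placeholder values, and expires refers to the optional RFC 9421 signature parameter of that name.

```python
from typing import Optional


def timestamps_ok(created: int, now: float, expires: Optional[int] = None,
                  max_age: int = 300, skew: int = 30) -> bool:
    """Freshness check that tolerates small clock differences between bot and server."""
    if created > now + skew:            # bot clock too far ahead (or forged timestamp)
        return False
    if now - created > max_age + skew:  # signature too old
        return False
    if expires is not None and now > expires + skew:  # signer-declared expiry passed
        return False
    return True


print(timestamps_ok(created=1000, now=990))   # bot clock 10 s ahead: accepted
print(timestamps_ok(created=1000, now=900))   # 100 s ahead: rejected
```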

WBA solves authentication (who is this bot), but you still need to solve authorization (what is this bot allowed to do) and reputation (should I trust this bot even though it’s verified).

The Rough Edges

WBA is useful, but it’s not perfect. The spec has limitations that reflect its early-stage status.

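ASCII-only components. The signature base is built as an ASCII string, so header values containing non-ASCII characters can’t be signed as-is. RFC 9421 offers a binary-wrapping option (the bs parameter) for such fields, but both sides have to support it. If you’re working with internationalized content, parts of your request may go unprotected.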

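No query parameter coverage. The component set shown earlier (@authority, @path, @method) doesn’t include the query string, because @path stops at the “?”. RFC 9421 does define @query and @query-param components, but they aren’t part of that signature. This is actually a reasonable tradeoff (query params are often noise), but it means you can’t cryptographically verify that query parameters weren’t modified.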
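To see the gap, derive the RFC 9421 values for two URLs that differ only in their query string. A standard-library Python sketch (simplified: it doesn’t strip default ports from the authority):

```python
from urllib.parse import urlsplit


def derived_components(url: str) -> dict:
    """Derive simplified RFC 9421 @authority, @path and @query values from a URL."""
    parts = urlsplit(url)
    return {
        "@authority": parts.netloc.lower(),
        "@path": parts.path or "/",   # an empty path is treated as "/"
        "@query": "?" + parts.query,  # just "?" when there is no query string
    }


a = derived_components("https://example.com/search?q=shoes&page=1")
b = derived_components("https://example.com/search?q=shoes&page=999")
print(a["@path"] == b["@path"])    # True: a signature over @path can't tell them apart
print(a["@query"] == b["@query"])  # False: covering @query would
```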

Caching challenges. You need to cache JWKS files (hitting them on every request would be insane), but caching means dealing with invalidation. How long do you cache? How do you handle key rotation? These are left to implementers.
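One way to answer those questions: a per-URL TTL cache that falls back to the last good copy when the key server is slow or down. A Python sketch; JwksCache, the fetch callback, and the one-hour/one-day TTLs are hypothetical choices, not spec requirements.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class JwksCache:
    """TTL cache for bot key directories, keyed by Signature-Agent URL.

    `fetch` is any function that downloads and parses a directory, e.g. an HTTP
    call with a short timeout. If a refresh fails, a stale copy is served for up
    to `stale_ttl` seconds so a flaky key server doesn't fail every request.
    """

    def __init__(self, fetch: Callable[[str], dict], ttl: float = 3600,
                 stale_ttl: float = 86400, clock: Callable[[], float] = time.time):
        self._fetch = fetch
        self._ttl = ttl
        self._stale_ttl = stale_ttl
        self._clock = clock
        self._entries: Dict[str, Tuple[float, dict]] = {}  # url -> (fetched_at, jwks)

    def get(self, url: str) -> Optional[dict]:
        now = self._clock()
        entry = self._entries.get(url)
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]            # fresh hit: no network round trip
        try:
            jwks = self._fetch(url)
        except Exception:
            if entry is not None and now - entry[0] < self._stale_ttl:
                return entry[1]        # refresh failed: serve the stale copy
            return None                # nothing usable: treat the bot as unverified
        self._entries[url] = (now, jwks)
        return jwks

    def invalidate(self, url: str) -> None:
        """Force a refetch, e.g. after seeing a keyid missing from the cached set."""
        self._entries.pop(url, None)
```

The invalidate hook lets a freshly rotated key work before the TTL runs out; rate-limit it, or a client sending random keyids can make you refetch constantly.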

Performance overhead. Signature verification costs CPU cycles. For high-traffic sites dealing with massive bot loads, this could matter. Ed25519 verification is lightweight, but “lightweight” is relative when you’re verifying millions of requests. Even fast crypto adds up at hyperscale. The bigger cost is the network: fetch public keys on every request, and you’re going to have a bad time. Cache the JWKS aggressively and the remaining overhead is the per-request signature check, which is manageable.

Mixed adoption period. During the transition, you’re running two systems: WBA for bots that support it, legacy methods for everything else. This operational complexity is unavoidable but annoying.

Where This Is Headed

The IETF working group is actively iterating on the spec. Expect refinements to key rotation guidance, directory formats, and possibly extensions for more complex scenarios like multi-agent systems or delegated signing.

The bigger question is adoption. WBA succeeds if it reaches critical mass. That means major bot operators (Google, Microsoft, OpenAI, Anthropic) need to implement it. Major platforms need to verify it. Infrastructure providers need to support it.

The incentives are aligned. Bot operators want reliable access and better treatment. Server operators want trustworthy verification. Infrastructure providers want scalable bot management solutions. WBA gives everyone something they need.

And honestly, the timing is perfect. As AI agents become more autonomous and common, verifiable bot identity shifts from “nice feature” to “critical requirement.” When AI agents are making purchases, accessing sensitive data, and acting on behalf of users, knowing exactly who they are becomes essential for security and compliance.

We’re past the point where we can keep trusting User-Agent headers and hoping for the best.

What You Should Do About This

If you’re building bots, start paying attention. Implementing WBA now positions you well for when verification becomes standard practice. It’s backwards compatible (servers that don’t understand the headers just ignore them), so there’s minimal downside.

If you’re managing infrastructure, think about how WBA fits into your bot management strategy. You don’t need to block unverified bots immediately, but you can start logging and tracking verified vs unverified traffic to understand the patterns.
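Tracking can start as simply as counting requests by claimed bot name and verification outcome. A Python sketch; the (claimed name, verified agent) event shape and the bot names are hypothetical stand-ins for whatever your logs record.

```python
from collections import Counter
from typing import Iterable, Optional, Tuple


def summarize(events: Iterable[Tuple[str, Optional[str]]]) -> Counter:
    """Count requests per (claimed bot name, verification status)."""
    counts: Counter = Counter()
    for claimed, verified_agent in events:
        status = "verified" if verified_agent else "unverified"
        counts[(claimed, status)] += 1
    return counts


log = [
    ("SearchBot", None),
    ("SearchBot", None),
    ("ExampleBot", "https://botdomain.com"),
    ("ExampleBot", None),
]
for (claimed, status), n in sorted(summarize(log).items()):
    print(f"{claimed:12} {status:10} {n}")
```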

If you’re designing APIs, consider how bot authentication fits into your security model. WBA tells you who the bot is. You still need to decide what they’re allowed to do.

The spec lives at datatracker.ietf.org/wg/webbotauth. The architecture and protocol documents are readable and pragmatic. Cloudflare’s documentation has the most mature implementation examples if you want to see real code.

Pro tip: If you’re setting up a bot directory, use Cloudflare’s http-signature-directory CLI tool to validate it before going live. It catches the kind of formatting issues that will make verification fail silently, and nobody wants to debug why their bot signatures aren’t working when it’s just a missing quote or wrong key format.

The Bottom Line

WBA turns bot authentication from a trust exercise into cryptographic proof. It’s not perfect, and it won’t solve every bot problem. Sophisticated attackers who compromise legitimate bot infrastructure can still cause damage. Verified bots can still misbehave.

But it solves the foundational problem: proving bot identity. Turning “this bot claims to be X” into “this bot provably is X.” In a world drowning in automated traffic where you can’t trust anything, that’s valuable.

The web has needed this for a long time. We’ve been living with easily spoofed User-Agent headers because we had nothing better. Now we have something better. The question is whether the industry adopts it fast enough to matter.

If you’re dealing with bot traffic in any serious way, WBA should be on your radar. The cryptographic handshake for bots is finally here.