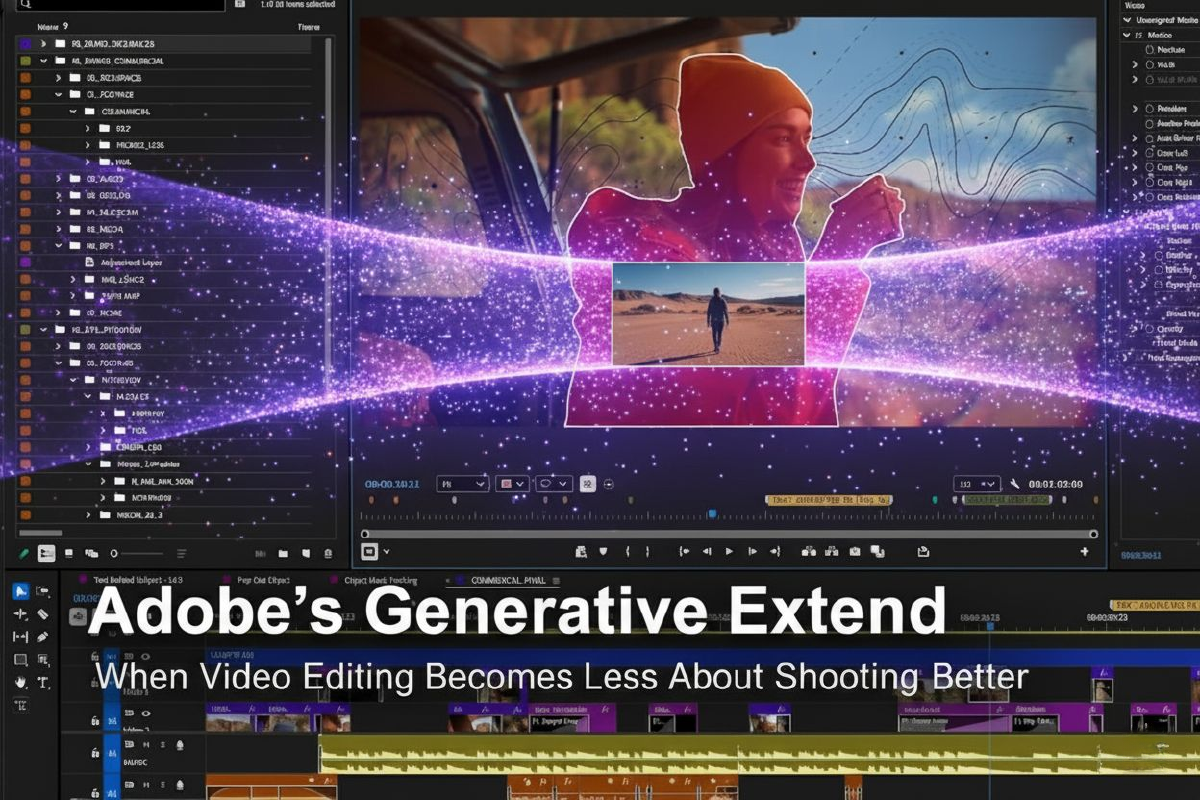

Adobe’s Generative Extend for Premiere Pro does exactly what it says: it extends your video clips by generating new frames at the beginning or end. Clip too short? Click and drag. Need to smooth a transition? The AI fills the gap. Hold a shot longer for better timing? Done.

It’s built into the Premiere Pro timeline and powered by Adobe’s Firefly Video Model. The use cases are immediately obvious for anyone who’s edited video professionally: covering awkward cuts, stretching b-roll to match narration timing, fixing clips that end just a second too early. These are real problems that normally require reshoots, stock footage, or creative workarounds.

What It Changes

Here’s where it gets interesting. Video editing used to be about working with what you captured. You shot extra footage, planned for coverage, and edited within the constraints of your material. Generative Extend shifts that: now you’re creating footage you never shot.

That’s useful, but it changes the relationship between shooting and editing. If you can generate those extra frames, why stress about getting clean entrances and exits on every shot? Why shoot more coverage when AI can bridge the gaps?

The answer, of course, is quality. AI-generated frames work for extending backgrounds or holding on static shots. But complex motion, detailed subjects, or scenes where continuity matters? Those still require the real thing. Generative Extend solves specific problems well but doesn’t replace good shooting.

The Practical Reality

For working editors, this is a time-saver, not a game-changer. It handles the edge cases that used to eat up time: the two-second gap you can’t fill, the transition that’s almost smooth but not quite. It’s not redefining editing workflows; it’s removing friction.

What’s worth watching is how this changes expectations. When clients know clips can be extended, will they expect every shot to be perfectly timed without reshoots? When budgets are tight, will “fix it with AI” become the default instead of “shoot it properly”?

Generative Extend is a practical tool solving real problems. Just don’t mistake convenience for craft.

Learn more: Read Adobe’s official announcement about Generative Extend.