Cursor just solved one of the most annoying problems in AI-assisted development: how do you share complex prompts with your team?

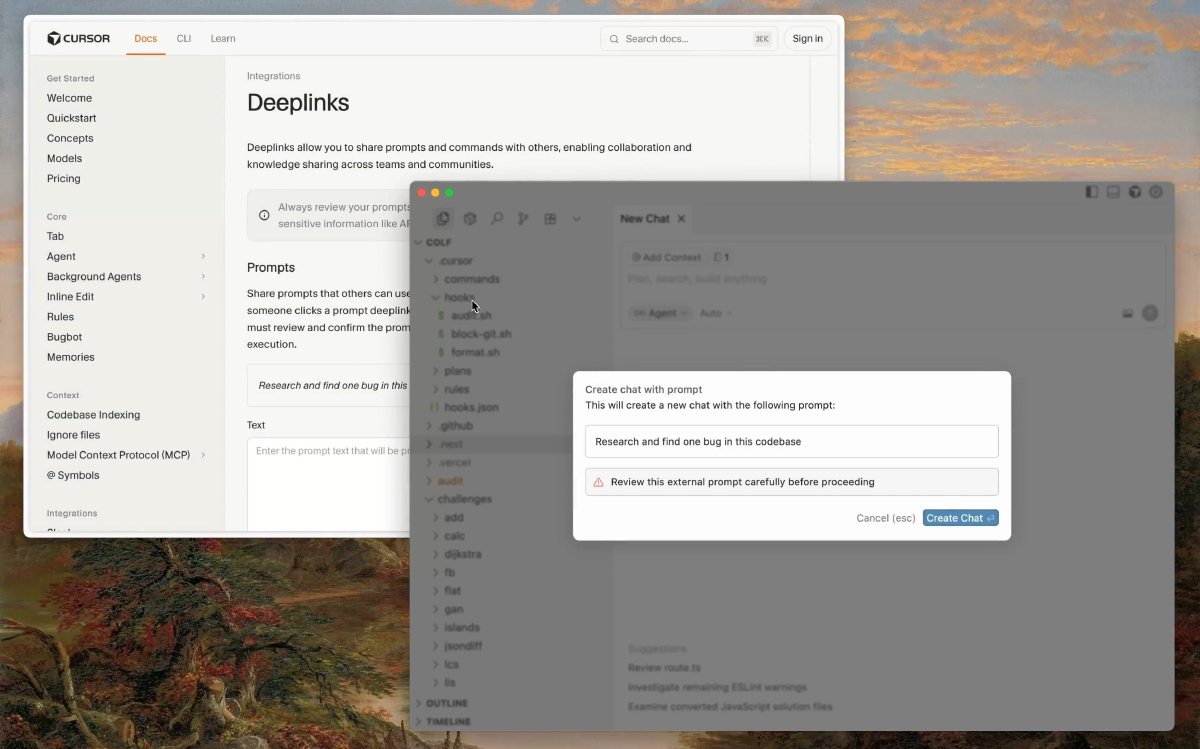

Version 1.7 shipped deeplinks for shareable prompts. Not just text snippets — full context packages that automatically attach the right files, apply team rules, and set up the exact environment you need.

This isn’t another link-sharing feature. It’s Cursor betting that standardized AI workflows are the next evolution of team development.

## What Cursor deeplinks actually do

Think of deeplinks as bookmarkable AI workflows:

### 🔗 One-click setup

- Share a URL: Team member clicks, gets the exact prompt setup

- Auto-attach files: No more “make sure to include these 5 files in context”

- Apply rules: The rules in your `.cursorrules` file load automatically with the prompt

- Set context: The AI gets the same background info every time

### 📋 Use cases they’re targeting

- Setup instructions: “Run this link to configure your dev environment”

- Code review templates: Standard prompts for security, performance, accessibility checks

- Documentation workflows: “Generate docs for this API using our standards”

- Troubleshooting guides: Diagnostic prompts with the right context files attached

## How it actually works

Based on the official documentation, here’s the technical flow:

### The deeplink structure

`cursor://prompt?text=your-prompt&files=file1.js,file2.py&rules=true`
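One detail the example glosses over is encoding: real prompt text contains spaces and punctuation that must be percent-encoded before it can travel in a URL. The minimal Python sketch below builds a link in the shape of the example above. The `cursor://prompt` prefix and the `files` and `rules` parameters are copied from that example and should be treated as assumptions; the official deeplinks documentation is the authority on which scheme and parameters the current Cursor build accepts. `build_prompt_deeplink` is a hypothetical helper, not a Cursor API.

```python
from __future__ import annotations

from urllib.parse import quote, urlencode


def build_prompt_deeplink(text: str, files: list[str] | None = None, rules: bool = False) -> str:
    """Assemble a prompt link in the shape of the article's example URL.

    Assumption: the scheme prefix and the parameter names (text, files, rules)
    are taken from that example, not from a verified Cursor spec.
    """
    params = {"text": text}
    if files:
        # Comma-separated list, as in files=file1.js,file2.py
        params["files"] = ",".join(files)
    if rules:
        params["rules"] = "true"
    # quote_via=quote encodes spaces as %20 instead of '+'; leaving ',' and '/'
    # unescaped keeps file lists and paths readable in the shared link.
    return "cursor://prompt?" + urlencode(params, quote_via=quote, safe=",/")


print(build_prompt_deeplink("Review for SQL injection", ["auth.js", "db.py"], rules=True))
# cursor://prompt?text=Review%20for%20SQL%20injection&files=auth.js,db.py&rules=true
```

Encoding with `%20` rather than `+` is a deliberate choice: some parsers treat a literal `+` in a query string as a space, and others do not, so escaping everything except the list separator is the safer default.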

### What happens when clicked

- Cursor opens to the exact project context

- Files attach automatically to the conversation

- Rules load from your `.cursorrules` file

- Prompt populates in the chat input

- Team member just hits enter
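Everything in that list happens inside the editor, but the first step any handler has to take can be sketched on its own: decoding the link back into a prompt, a file list and a rules flag. The Python below assumes the parameter format from the example URL earlier in this section; `PromptLink` and `parse_prompt_deeplink` are hypothetical names, and this is an illustration, not Cursor's implementation.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from urllib.parse import parse_qs, urlsplit


@dataclass
class PromptLink:
    text: str
    files: list[str] = field(default_factory=list)
    apply_rules: bool = False


def parse_prompt_deeplink(url: str) -> PromptLink:
    """Decode a link in the article's example format (an assumed format)."""
    parts = urlsplit(url)
    # For cursor://prompt?..., urlsplit puts "prompt" in netloc and leaves path empty
    if parts.scheme != "cursor" or parts.netloc != "prompt":
        raise ValueError(f"not a prompt deeplink: {url!r}")
    query = parse_qs(parts.query)  # percent-decodes values; one list per key
    text = query.get("text", [""])[0]
    files = [f for f in query.get("files", [""])[0].split(",") if f]
    apply_rules = query.get("rules", ["false"])[0].lower() == "true"
    return PromptLink(text=text, files=files, apply_rules=apply_rules)


link = parse_prompt_deeplink(
    "cursor://prompt?text=Review%20for%20SQL%20injection&files=auth.js,db.py&rules=true"
)
print(link)
# PromptLink(text='Review for SQL injection', files=['auth.js', 'db.py'], apply_rules=True)
```

Rejecting anything that is not the expected scheme up front keeps a malformed or foreign link from being half-applied.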

### MCP integration ready

This connects to Cursor’s bigger MCP (Model Context Protocol) strategy. Your deeplinks can reference:

- Database schemas via MCP servers

- Jira tickets and project context

- Documentation repositories

- Team-specific knowledge bases
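On the MCP side, Cursor's documentation (at the time of writing) describes a separate install link that carries a server name plus a base64-encoded JSON server config, so a teammate can add the same MCP server in one click. The sketch below assumes the `cursor://anysphere.cursor-deeplink/mcp/install` prefix with `name` and `config` query parameters; verify that shape against the current docs before relying on it. The Postgres reference server is only an example, and `build_mcp_install_link` and `inspect_mcp_install_link` are hypothetical helpers. The inspect function lets you check what a shared link would install before clicking it.

```python
from __future__ import annotations

import base64
import json
from urllib.parse import parse_qs, quote, urlsplit

# Assumed prefix, based on Cursor's MCP install-link docs; verify before use
INSTALL_PREFIX = "cursor://anysphere.cursor-deeplink/mcp/install"


def build_mcp_install_link(name: str, server_config: dict) -> str:
    # Compact JSON keeps the base64 payload, and therefore the link, short
    payload = json.dumps(server_config, separators=(",", ":"))
    encoded = base64.b64encode(payload.encode("utf-8")).decode("ascii")
    # Base64 can contain '+', '/' and '='; percent-encode them so a query
    # parser does not turn '+' into a space
    return f"{INSTALL_PREFIX}?name={quote(name, safe='')}&config={quote(encoded, safe='')}"


def inspect_mcp_install_link(url: str) -> tuple[str, dict]:
    # Decode a link back into (name, config) so it can be reviewed before use
    query = parse_qs(urlsplit(url).query)
    config = json.loads(base64.b64decode(query["config"][0]))
    return query["name"][0], config


postgres = {
    "command": "npx",
    "args": ["-y", "@modelcontextprotocol/server-postgres", "postgresql://localhost/mydb"],
}
link = build_mcp_install_link("postgres", postgres)
print(inspect_mcp_install_link(link))
```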

## Why this matters for teams

This is Cursor acknowledging that AI workflows need to be repeatable:

### Before deeplinks

- Slack: “Hey, can you use AI to review this PR for security issues?”

- Response: “Sure, which files should I include? What should I ask it to look for?”

- Back-and-forth about context, specific concerns, coding standards

- Inconsistent results because everyone prompts differently

### After deeplinks

- Slack: “Security review: [cursor://security-review?files=auth.js,db.py…]”

- Click, enter, done

- Same prompt, same context, same standards every time

## The bigger picture: Workflow standardization

As I covered in my deep-dive on AI IDEs, the real value in these tools isn’t the models — it’s the workflow integration.

Deeplinks represent Cursor’s bet that:

### Teams will standardize AI interactions

Instead of everyone having their own prompting style, successful teams will develop canonical workflows for common tasks:

- Code review checklists

- Architecture decision templates

- Debugging runbooks

- Documentation standards

### Context becomes shareable

Your team’s collective knowledge — the rules, the gotchas, the “always check this” reminders — can now travel with the prompts.

### AI becomes a team capability, not an individual skill

Instead of “Sarah is good at prompting,” it becomes “our team has good prompts.”

## The competitive landscape

This puts Cursor ahead of alternatives in team adoption:

### vs GitHub Copilot

Copilot has Spaces for shared context, but deeplinks make sharing specific workflows one-click instead of multi-step.

### vs Windsurf

Windsurf focuses on MCP server integration for enterprise data, but deeplinks solve the “how do we share this workflow” problem more directly.

### vs JetBrains AI Assistant

Still catching up on workflow sharing features. Their enterprise controls are solid, but team workflow standardization isn’t there yet.

## What’s still beta about it

Cursor calls this a beta feature, which likely means:

### 🚧 Limited customization

Current deeplinks probably support basic prompt + files + rules. Advanced context manipulation might come later.

### 🔒 Security considerations

Sharing prompts that automatically attach files raises data governance questions. Enterprise controls are probably still evolving.
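Until those controls mature, a team could lint the file lists in its shared links before they are posted to a wiki or Slack. Below is a minimal sketch of such a check, assuming links carry a plain list of relative paths as in the example URL earlier in this post. `ALLOWED_ROOTS`, `BLOCKED_NAMES` and both functions are hypothetical team conventions, not a Cursor feature. The check rejects absolute paths, `..` traversal, known secret files, and anything outside the allowed directories.

```python
from __future__ import annotations

from pathlib import PurePosixPath

ALLOWED_ROOTS = ("src", "docs")           # hypothetical: directories links may attach
BLOCKED_NAMES = {".env", "secrets.json"}  # hypothetical: files that must never be attached


def check_attachable(path: str) -> bool:
    p = PurePosixPath(path)
    # Absolute paths and '..' segments could reach outside the repository
    if p.is_absolute() or ".." in p.parts:
        return False
    if p.name in BLOCKED_NAMES:
        return False
    # Only allow files whose first path segment is an approved directory
    return bool(p.parts) and p.parts[0] in ALLOWED_ROOTS


def rejected_files(files: list[str]) -> list[str]:
    """Return the paths a shared link should not be allowed to attach."""
    return [f for f in files if not check_attachable(f)]


print(rejected_files(["src/auth.js", "docs/api.md", "src/../.env", "/etc/passwd"]))
# ['src/../.env', '/etc/passwd']
```

An allowlist is used rather than a blocklist alone because new sensitive files appear over time, and a default-deny rule fails safe when they do.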

### 📊 No analytics yet

Teams will want to know: which prompts get used? What workflows are most effective? That tracking probably comes later.
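In the meantime, a team could gather its own numbers, for example by routing shared links through an internal redirect that logs who opened which prompt. Assuming such a log exists (the event shape below is hypothetical), a short summary already answers the first question. Ranking by distinct users rather than raw clicks is a design choice: a prompt five people use says more about team adoption than one person's favorite.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def summarize_usage(events: list[dict]) -> list[tuple[str, int, int]]:
    """Return (prompt_id, total_uses, distinct_users), most widely used first."""
    uses = Counter(e["prompt_id"] for e in events)
    users = defaultdict(set)
    for e in events:
        users[e["prompt_id"]].add(e["user"])
    rows = [(pid, uses[pid], len(users[pid])) for pid in uses]
    # Rank by distinct users, then total uses, then name for a stable order
    return sorted(rows, key=lambda r: (-r[2], -r[1], r[0]))


# Hypothetical click log from an internal link redirect
events = [
    {"prompt_id": "security-review", "user": "ana"},
    {"prompt_id": "security-review", "user": "ben"},
    {"prompt_id": "api-docs", "user": "ana"},
    {"prompt_id": "api-docs", "user": "ana"},
    {"prompt_id": "api-docs", "user": "ana"},
]
print(summarize_usage(events))
# [('security-review', 2, 2), ('api-docs', 3, 1)]
```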

## Real-world adoption patterns

Based on how teams typically adopt new workflow tools:

### Early adopters (now)

- Setup automation: New team member onboarding prompts

- Documentation: Standard prompts for generating API docs, README updates

- Code review: Security, accessibility, and performance check templates

### Next 6 months

- Architecture decisions: Standard prompts for evaluating technical tradeoffs

- Debugging workflows: Diagnostic prompts with environment context attached

- Compliance checks: Automated prompts for regulatory requirements

### Enterprise adoption

- Audit trails: Who used which prompts when

- Template management: Centralized prompt libraries with version control

- Integration with existing tools: Deeplinks in Slack, documentation sites, issue trackers
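Version control and audit trails fit together if every template version has a stable identifier. One common approach, sketched here under the assumption that templates are stored as small JSON-style records (the fields are hypothetical), is to hash a canonical serialization. Reordering keys does not change the version, editing the prompt does, and each audit entry records exactly which version someone used.

```python
import hashlib
import json
from datetime import datetime, timezone


def template_version(template: dict) -> str:
    """Short content hash: identical templates always get the same version."""
    # sort_keys plus fixed separators give one canonical JSON form per template
    canonical = json.dumps(template, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


def audit_record(user: str, template: dict) -> dict:
    """One audit-log entry: who used which template version, and when (UTC)."""
    return {
        "user": user,
        "template_id": template["id"],
        "version": template_version(template),
        "used_at": datetime.now(timezone.utc).isoformat(),
    }


template = {"id": "security-review", "text": "Check auth.js for SQL injection", "files": ["auth.js"]}
print(audit_record("ana", template))
```

Truncating the hash to 12 hex characters keeps log lines readable; a team with a very large template library might keep the full digest instead.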

## Why this might actually stick

Previous attempts at “shareable developer workflows” often failed because they:

- Required too much setup overhead

- Didn’t integrate with existing tools

- Lost context in translation

Cursor deeplinks avoid these traps:

- One-click sharing removes friction

- Native IDE integration meets developers where they work

- Context preservation ensures workflows actually work when shared

## What teams should do now

If you’re using Cursor:

- Start simple: Create deeplinks for your most common AI tasks

- Document in your wiki: Make these workflows discoverable

- Iterate based on usage: See which prompts actually get reused

- Build your team’s prompt library gradually
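If the prompt library lives as data in the repo, the wiki page can be generated from it instead of maintained by hand. A minimal sketch, assuming the link format from the example URL earlier in this post (`text` and `files` parameters) and a hypothetical `PROMPTS` list; whether a given wiki renders `cursor://` links as clickable depends on the wiki.

```python
from __future__ import annotations

from urllib.parse import quote, urlencode

# Hypothetical team prompt library; in practice this could be a JSON or YAML file in the repo
PROMPTS = [
    {"name": "Security review", "text": "Review these files for injection and auth issues",
     "files": ["auth.js", "db.py"]},
    {"name": "API docs", "text": "Generate docs for this API using our standards", "files": []},
]


def to_link(prompt: dict) -> str:
    # Assumed link format, copied from the article's example URL
    params = {"text": prompt["text"]}
    if prompt["files"]:
        params["files"] = ",".join(prompt["files"])
    return "cursor://prompt?" + urlencode(params, quote_via=quote, safe=",/")


def wiki_table(prompts: list[dict]) -> str:
    """Render the library as a Markdown table with one link per workflow."""
    rows = ["| Workflow | Deeplink |", "| --- | --- |"]
    for p in prompts:
        rows.append(f"| {p['name']} | [Open in Cursor]({to_link(p)}) |")
    return "\n".join(rows)


print(wiki_table(PROMPTS))
```

Regenerating the page in CI whenever the library file changes keeps the wiki and the prompts from drifting apart.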

If you’re evaluating AI IDEs: Consider how important workflow standardization is for your team. If you want consistent AI interactions across your team, deeplinks might be a deciding factor.

## The uncomfortable question

Deeplinks raise an interesting challenge: who owns the prompts?

When your team develops great AI workflows, are those:

- Individual contributor skills that people take when they leave?

- Team assets that should be documented and preserved?

- Company intellectual property that needs governance and control?

Cursor’s deeplinks push teams toward treating AI workflows as shared team assets. That’s probably the right direction, but it requires thinking about AI prompts like any other team documentation.

## What’s next

Expect other AI IDEs to copy this quickly. The pattern is too useful:

- Windsurf will probably integrate with their MCP architecture

- Copilot will tie it to GitHub workflow templates

- JetBrains will add enterprise controls and audit logging

But Cursor shipped first, and first-mover advantage matters for team adoption tools.

Try it out: Check the official deeplinks documentation for setup instructions, or explore the version 1.7 changelog for other new features.

For more context on how AI IDEs work under the hood, see my comprehensive breakdown of the technical architecture behind these tools.