GitHub has launched Bring Your Own Key (BYOK) for Copilot in public preview. It lets Copilot Enterprise and Copilot Business customers connect API keys from their own large language model (LLM) providers directly to GitHub’s AI assistant.

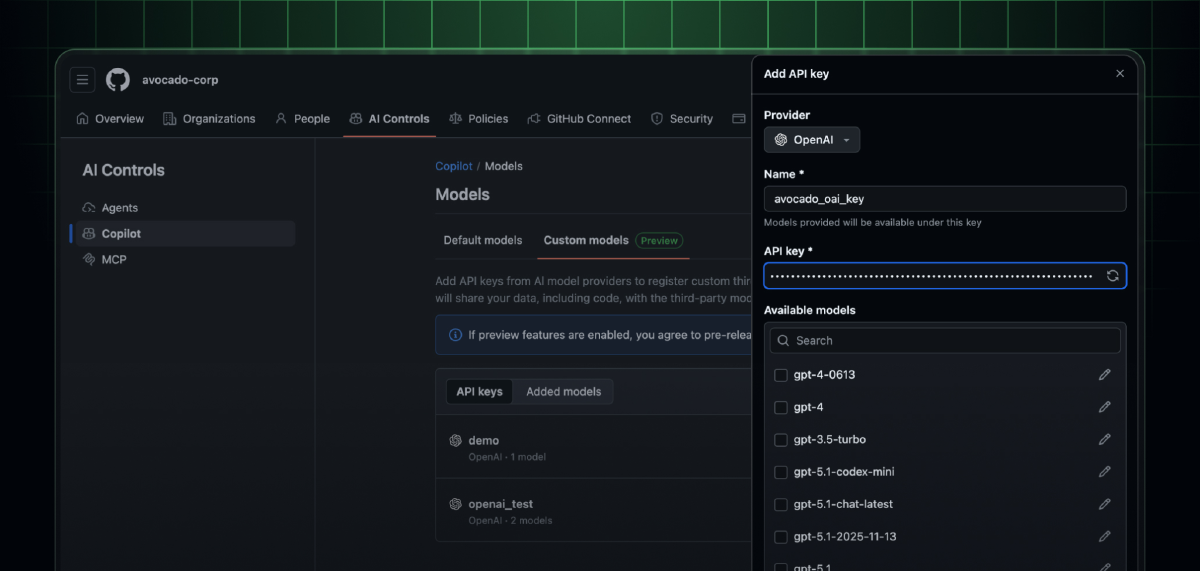

## Connect Your Preferred LLM Provider

Enterprise developers can now connect API keys from Anthropic, OpenAI, xAI, and Microsoft Foundry to GitHub Copilot. Once a key is connected, every model available through that key appears in Copilot Chat on github.com and in supported IDEs, including VS Code, JetBrains IDEs, Eclipse, and Xcode.

The feature answers a long-standing request from enterprise teams that already work with specific LLM providers or run custom models tailored to their workflows. Instead of being limited to GitHub-hosted models, organizations can now bring those existing provider relationships and models into Copilot.

## Centralized Administration

BYOK adds granular administrative controls at both the enterprise and organization levels. Enterprise admins add API keys and decide which organizations can access the connected models. Organization admins then configure access for their own members, enabling, disabling, or customizing model availability from a single settings area.

Managing keys centrally keeps governance consistent across the enterprise, while organization-level settings let individual teams adjust model availability as their requirements change. Connected models work in all major Copilot modes: Agent, Plan, Ask, and Edit.
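To make the two-level model concrete, here is a minimal Python sketch of how enterprise grants and organization settings could combine to decide which models a team sees. Everything in it (`ProviderKey`, `OrgPolicy`, `available_models`, and the org and model names) is hypothetical and assumes one simple rule: a model is visible only if the enterprise admin granted the organization access to the key that exposes it and the organization admin has not disabled it. It is not GitHub's API or implementation.

```python
from dataclasses import dataclass, field


@dataclass
class ProviderKey:
    """Hypothetical record for one connected provider API key."""
    provider: str                                         # e.g. "anthropic", "openai"
    models: set[str]                                      # models reachable through this key
    allowed_orgs: set[str] = field(default_factory=set)   # chosen by the enterprise admin


@dataclass
class OrgPolicy:
    """Hypothetical per-organization settings managed by an org admin."""
    disabled_models: set[str] = field(default_factory=set)


def available_models(keys: list[ProviderKey], org: str, policy: OrgPolicy) -> set[str]:
    """Return the models an organization's members could pick in Copilot Chat.

    Step 1 (enterprise level): collect models from keys granted to this org.
    Step 2 (organization level): drop anything the org admin disabled.
    """
    granted: set[str] = set()
    for key in keys:
        if org in key.allowed_orgs:
            granted |= key.models
    return granted - policy.disabled_models


# Placeholder model and org names, for illustration only.
keys = [
    ProviderKey("anthropic", {"claude-a", "claude-b"}, allowed_orgs={"platform"}),
    ProviderKey("openai", {"gpt-x"}, allowed_orgs={"platform", "mobile"}),
]
print(sorted(available_models(keys, "platform", OrgPolicy(disabled_models={"claude-b"}))))
# ['claude-a', 'gpt-x']
print(sorted(available_models(keys, "mobile", OrgPolicy())))
# ['gpt-x']
```

Keeping the enterprise grant and the organization override as separate steps mirrors the split of responsibilities described above: the enterprise sets the ceiling, and each organization can only narrow it.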

## Billing Stays With Your Provider

A significant advantage of BYOK is its billing model. API calls made through connected keys are billed directly by your chosen provider under that provider's terms, and they don’t count against GitHub Copilot usage quotas. Teams can apply existing contracts, enterprise agreements, or credits they’ve already negotiated with AI providers.
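The billing rule amounts to routing each request to one of two ledgers. The toy Python sketch below shows that split. It assumes a hypothetical set of BYOK model names (`BYOK_MODELS`) and, as a simplification, treats every request to a GitHub-hosted model as drawing on the Copilot quota; actual quota accounting may differ by plan and model.

```python
from collections import Counter

# Hypothetical names of models reached through a customer-supplied (BYOK) key.
BYOK_MODELS = {"claude-a", "gpt-x"}


def tally_usage(requests: list[str]) -> tuple[int, Counter]:
    """Split model requests into Copilot-quota usage and provider-billed usage.

    Returns (requests_counted_against_copilot_quota, provider_billed_counts_by_model).
    """
    copilot_quota = 0
    provider_billed: Counter = Counter()
    for model in requests:
        if model in BYOK_MODELS:
            provider_billed[model] += 1  # billed by the provider under your own agreement
        else:
            copilot_quota += 1           # simplification: hosted model draws on Copilot quota
    return copilot_quota, provider_billed


quota_used, billed = tally_usage(["gpt-x", "hosted-model", "claude-a", "gpt-x"])
print(quota_used)       # 1
print(billed["gpt-x"])  # 2
```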

## Known Limitations

The preview does not support OpenAI’s Responses API. Models configured to use a Responses endpoint won’t work with BYOK; connected OpenAI models must use the Completions API instead.
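Before connecting a key, an admin or platform team might want to flag model configurations that point at a Responses endpoint. The Python sketch below is a hypothetical pre-flight check, not part of GitHub's tooling. It relies on OpenAI's public endpoint paths (`/v1/responses` for the Responses API, `/v1/chat/completions` for Chat Completions, and `/v1/completions` for the legacy Completions API). Because the announcement doesn't say which Completions variant BYOK targets, the check accepts both.

```python
from urllib.parse import urlparse


def is_byok_compatible(endpoint: str) -> bool:
    """Hypothetical check: True if an OpenAI endpoint URL looks BYOK-compatible.

    Responses API endpoints (…/responses) are rejected, matching the preview's
    known limitation; Completions-style endpoints (…/completions) are accepted.
    """
    path = urlparse(endpoint).path.rstrip("/")
    if path.endswith("/responses"):
        return False  # Responses API: not supported by BYOK in the preview
    # Matches both /v1/chat/completions and the legacy /v1/completions.
    return path.endswith("/completions")


print(is_byok_compatible("https://api.openai.com/v1/chat/completions"))  # True
print(is_byok_compatible("https://api.openai.com/v1/responses"))         # False
```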

## Getting Started

BYOK is available now in public preview for GitHub Copilot Enterprise and Copilot Business subscribers. Administrators can connect their LLM provider’s API key in enterprise or organization settings and start using the connected models right away. GitHub is collecting feedback through its community forums to shape the feature’s future development.