Google just expanded Opal, its no-code AI app builder, to 15 new countries. The tool lets people create AI applications without writing a single line of code, opening up AI development to a much broader audience.

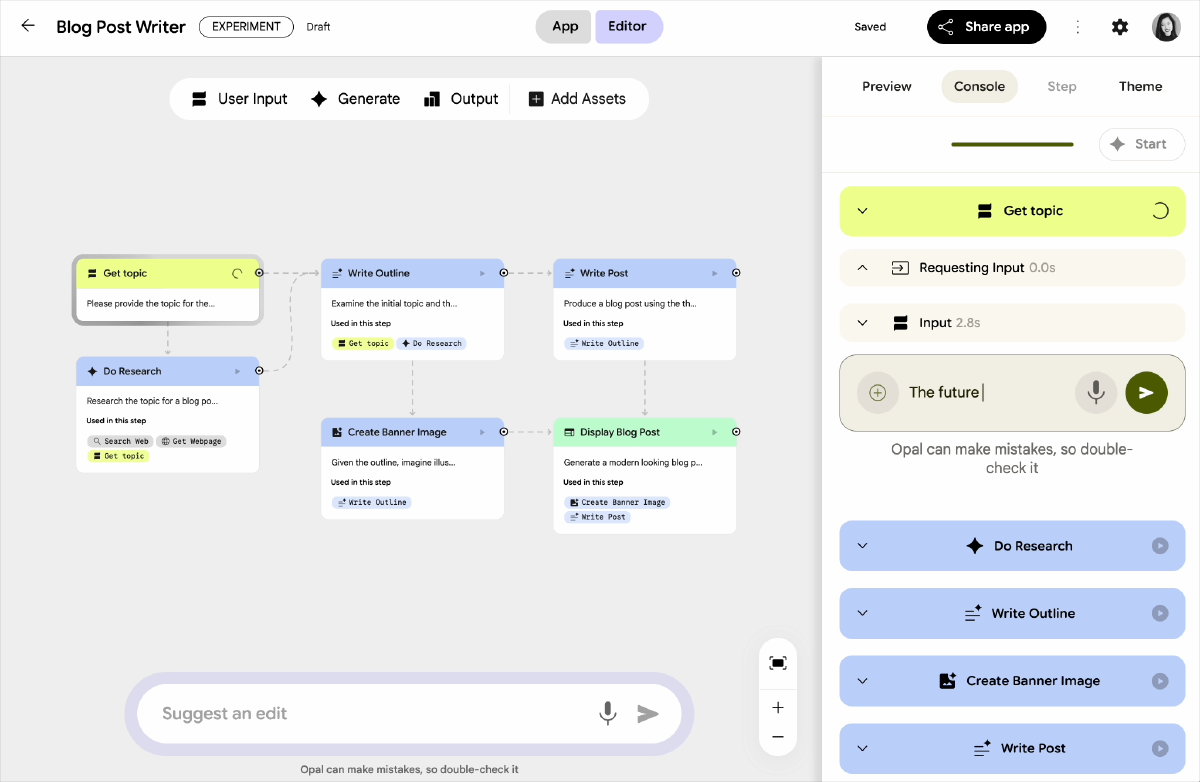

Opal provides a visual interface where users can drag and drop components, connect data sources, and define logic through simple menus and prompts. The goal is to remove technical barriers so that domain experts, business users, and creative professionals can build AI tools for their specific needs without depending on engineering teams.

The expansion is part of Google’s broader strategy to democratize AI. By making development accessible to non-programmers, Google is betting it can unlock innovation from people who understand problems deeply but lack traditional coding skills. A healthcare worker might build a patient management tool. A teacher might create a personalized learning assistant. The possibilities multiply when more people can participate.

For Google, wider adoption means more users in their ecosystem, more data flowing through their infrastructure, and stronger positioning against competitors also racing to simplify AI development. It’s good business wrapped in the language of empowerment.

But there’s justified concern about what happens when everyone can build AI without understanding how it works. Code quality, security vulnerabilities, ethical considerations, and basic software engineering principles aren’t automatically encoded in no-code tools. Accessibility without education could lead to poorly designed systems that cause more problems than they solve.

The Paradox of Democratization

Making powerful technology accessible is genuinely important. Gatekeeping serves the gatekeepers, not the community. But there’s a difference between democratizing access and assuming that access alone creates competence.

Some skills require depth that can’t be abstracted away. When we make things too easy, we risk creating the illusion of expertise without its substance. The person who builds an AI system should understand its limitations, failure modes, and potential harms, not just how to click buttons in a visual interface.

True democratization isn’t just about access to tools. It’s about access to knowledge, context, and the wisdom to use power responsibly. Otherwise, we’re not empowering people. We’re just making it easier to create problems at scale.