Google expanded Opal from 15 countries to more than 160. That’s roughly a tenfold jump in availability within weeks. The no-code AI builder lets anyone create mini-apps using natural language, no programming required.

The promise: describe what you want, Opal assembles it. Automated workflows, content generators, rapid prototypes. All built through conversation powered by Gemini.

I tested it using Google’s featured gallery examples. The platform works. But testing revealed something more important than whether individual examples succeed or fail.

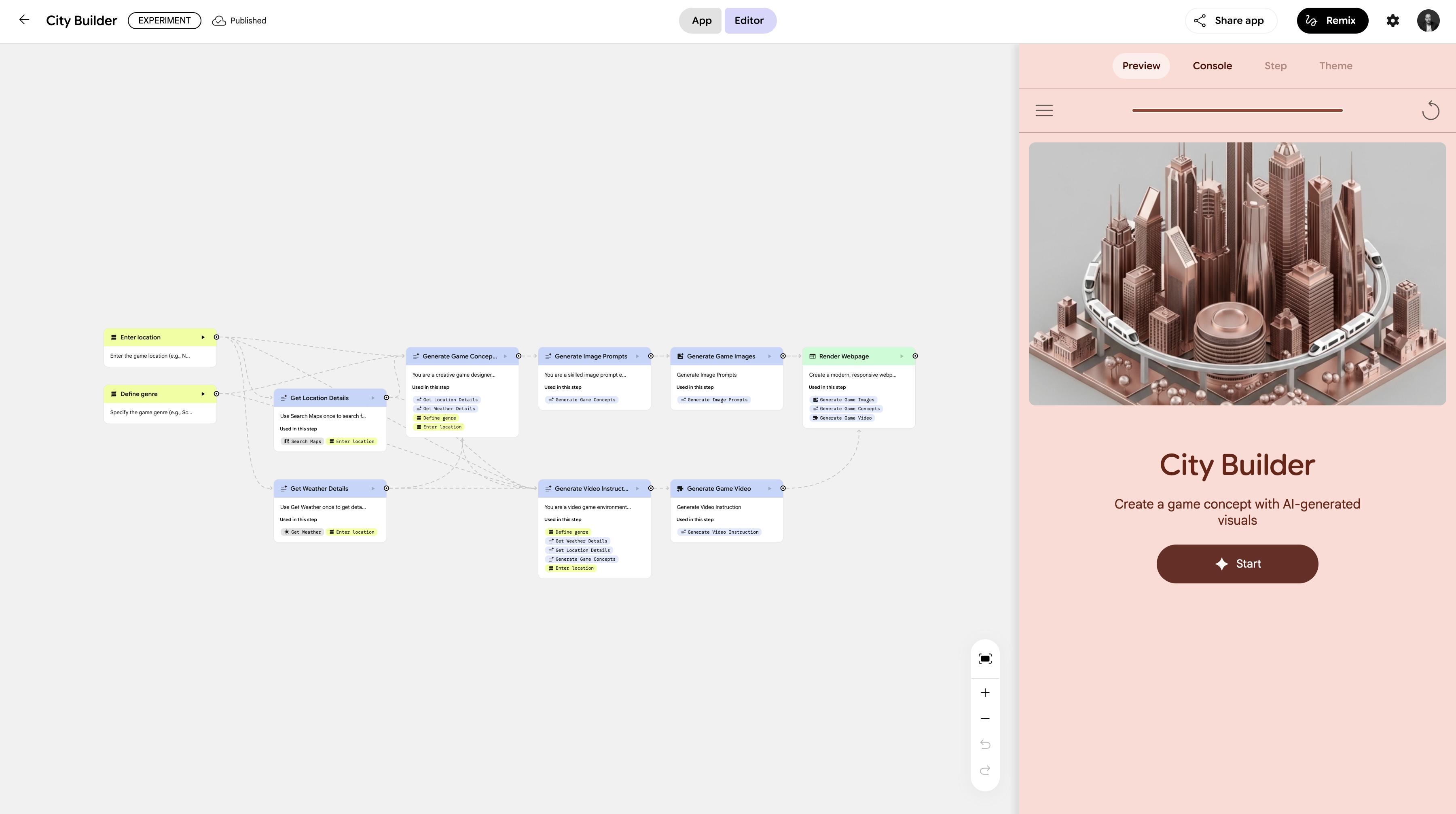

Test 1: City Builder

First example: “City Builder.” Promise: “Create a modern, responsive webpage to showcase a game concept with prominent game description, visually engaging key art display, and embedded trailer video.”

Simple inputs. City name: Tel Aviv. Genre: quest.

Five minutes later: a marketing page for “Tel Aviv Quest.” Players navigate Carmel Market, solve puzzles at the Tel Aviv Museum of Art, explore mysteries at Jaffa Clock Tower. Generated text, image, video.

Opal executed what it was asked to do. The platform worked. But the implementation—what the creator designed it to generate—wasn’t particularly useful. See the output here.

Not a playable game. Not professional enough for investors. Acceptable for a five-minute experiment, nothing more.

Test 2: Generated Playlist

Second test: “Generated Playlist.” Promise: “Create a playlist for your current mood with YouTube links.”

My input: “I’m in a relaxed weekend mood, sitting at my desk reading tech news and working on my blog. I’d love a playlist with classic and modern rock—mostly from the 1970s to the early 2000s. Something energetic but still easy to listen to while I write.”

Result: Nicely designed page. Five songs. Dire Straits, Fleetwood Mac, The Police, R.E.M., Coldplay. Good choices. See it here.

The problem: None of the YouTube links worked. They routed through vertexaisearch.cloud.google.com/grounding-api-redirect/ URLs that led nowhere.

So I edited the workflow. Added one instruction: “Verify that the YouTube link is actually accessible.”

Result: 1 out of 5 links now worked. Progress, but not exactly impressive.
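The broken links shared a recognizable shape, so they can be flagged before anyone clicks them. Below is a minimal sketch in Python, run outside Opal, that sorts a link into one of three buckets. The redirect host and path come from the links described above; the function name `classify_link` and the set of hosts treated as "direct YouTube" are my own assumptions for illustration.

```python
from urllib.parse import urlparse

# Host and path prefix of the broken links described in this test.
REDIRECT_HOST = "vertexaisearch.cloud.google.com"
REDIRECT_PREFIX = "/grounding-api-redirect/"

# Assumption: hosts that count as a direct YouTube link for this check.
YOUTUBE_HOSTS = {
    "youtube.com",
    "www.youtube.com",
    "m.youtube.com",
    "music.youtube.com",
    "youtu.be",
}


def classify_link(url: str) -> str:
    """Label a link as 'grounding-redirect', 'youtube', or 'other'."""
    parsed = urlparse(url)
    host = (parsed.hostname or "").lower()
    if host == REDIRECT_HOST and parsed.path.startswith(REDIRECT_PREFIX):
        return "grounding-redirect"
    if host in YOUTUBE_HOSTS:
        return "youtube"
    return "other"
```

This only inspects the shape of the URL. A link labeled `youtube` can still point at a video that no longer exists, so it narrows the manual checking rather than replacing it.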

The Real Lesson

This isn’t about whether Opal works. The platform does what it promises. You describe a workflow, it builds it.

This is about something more fundamental: AI still needs human verification.

When I coded, I reviewed output. When I consult with AI, I verify responses. With Opal, I needed to test the workflow, find the flaw, adjust the prompt, test again.

Trust, but verify. Someone said that once. Maybe that’s the story of AI, at least for now.

The platform can’t reliably verify whether YouTube links actually work. It generates what you tell it to generate. If your instructions are incomplete or the AI’s implementation is flawed, you get incomplete or flawed output.

That’s not a bug. That’s the current state of AI.

What’s Missing

I wanted the ability to drop in my own code to check things. To override AI decisions when I knew they were wrong. To verify outputs programmatically instead of clicking through five links.

Opal doesn’t offer that. It’s the conversational interface or nothing. For non-technical users, that’s the point. For anyone who knows code, it’s limiting.

This raises questions about who Opal serves best:

- Non-technical users get accessibility but lack verification skills

- Technical users get speed but lack control when AI fails

- Both need to manually check outputs the AI can’t verify itself
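For a sense of what "verify programmatically" could mean here, this is a rough Python sketch of the check I would have liked to bolt on. It is not something Opal provides. It assumes YouTube’s public oEmbed endpoint (`https://www.youtube.com/oembed`) answers with HTTP 200 for a video that is publicly available and with an error status otherwise; a video with embedding disabled may be reported as failing even though it plays on the site. The names `fetch_status` and `check_video_links` are hypothetical.

```python
import urllib.error
import urllib.parse
import urllib.request
from typing import Callable, Dict, Iterable

# Assumption: YouTube's oEmbed endpoint, used here as an availability probe.
OEMBED_ENDPOINT = "https://www.youtube.com/oembed"


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status of a GET request, or 0 if the request never completed."""
    request = urllib.request.Request(url, headers={"User-Agent": "link-check/0.1"})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # The server answered, but with an error status such as 404.
        return err.code
    except (OSError, ValueError):
        # DNS failure, timeout, refused connection, or a malformed URL.
        return 0


def check_video_links(
    links: Iterable[str],
    fetch: Callable[[str], int] = fetch_status,
) -> Dict[str, bool]:
    """Map each link to True if the oEmbed lookup for it returns HTTP 200."""
    results: Dict[str, bool] = {}
    for link in links:
        query = urllib.parse.urlencode({"url": link, "format": "json"})
        results[link] = fetch(f"{OEMBED_ENDPOINT}?{query}") == 200
    return results
```

Calling `check_video_links(playlist_links)` on the five generated links would return a dictionary of pass/fail flags in one go. The `fetch` parameter exists so the logic can be tried with a stand-in function instead of live network calls.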

What This Means

Google is positioning Opal as an alternative to Zapier and n8n. Simple automation without technical knowledge.

The platform delivers on that technically. But it doesn’t solve the verification problem. You still need to check whether outputs actually work. And if you’re non-technical, how do you even know what to verify?

That’s not an Opal problem. That’s an AI problem. Current AI can generate. It can’t reliably verify that what it generates works correctly in real-world conditions.

The Bigger Picture

This expansion to 160+ countries signals investment. Google wants Opal to scale globally. The platform can support that technically.

But the verification problem doesn’t scale. Whether you’re one user or one million, someone needs to check whether outputs actually work. AI can’t do that reliably yet.

Google’s track record adds uncertainty. Reader, Wave, Inbox, Hangouts—dozens of products killed when priorities shifted. Opal lives in Google Labs. Experimental. No guaranteed future.

For personal experiments? Worth trying. Fast, free, functional. But verify everything.

For business-critical workflows? Not yet. The abandonment risk and verification requirements are real.

The platform works. The AI generates what you ask for. But trust, then verify. Always verify.