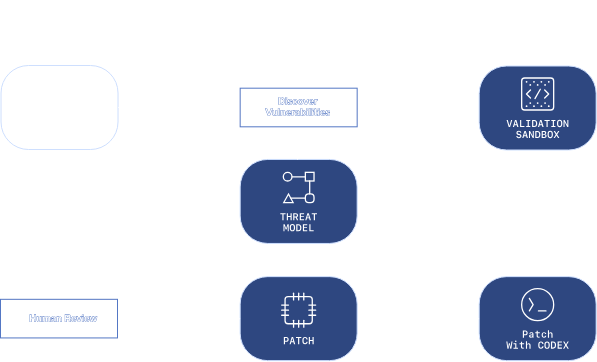

OpenAI just introduced Aardvark, an AI security researcher designed to autonomously hunt for vulnerabilities in your codebase. Unlike traditional security tools that rely on fuzzing or pattern matching, Aardvark uses large language model-powered reasoning to understand how code actually behaves—more like a human security expert than a rule-based scanner.

The pitch is compelling: Aardvark continuously monitors code repositories, analyzing changes as they happen to detect potential security issues before they reach production. When it finds something, it doesn’t just flag it—it proposes targeted fixes that understand the context of your code.

This matters because the scale of the vulnerability problem is overwhelming. Tens of thousands of new vulnerabilities are disclosed every year (more than 40,000 CVEs were published in 2024 alone), and security teams can’t review every change by hand. An AI that works around the clock, never gets tired, and analyzes each change as it lands could shift the balance in software security toward defenders.

But there’s a tension here worth thinking about. Automating vulnerability discovery at this scale doesn’t only help defenders; it also creates tools that attackers could repurpose. The same reasoning that finds a vulnerability in order to fix it could, in principle, find one in order to exploit it.

OpenAI’s bet is that the defensive advantage outweighs the risk: getting patches out faster matters more than the marginal increase in discovery capability for sophisticated attackers. They’re probably right. Most security breaches exploit known vulnerabilities, not zero-days, so shortening the gap between discovery and patch helps defenders more than automated discovery helps attackers.

The real question is whether autonomous security tools like Aardvark will complement human expertise or gradually replace it. Security research requires judgment, context, and understanding of business impact—things AI still struggles with. For now, Aardvark looks like a powerful force multiplier for security teams, not a replacement.

The future of software security is likely a collaboration between AI speed and human judgment. Aardvark is OpenAI’s opening move in that direction.

Learn more: Check out the official announcement to explore Aardvark’s capabilities, or sign up for the private beta if you want early access.